I originally wrote this as a unit of work to go with ISAs, which were the way that practical work was assessed on the 2009 specification. I think I now have an idea what the 2016 specification is after, and have rewritten this project to reflect the changes. Hopefully I am not too far from what the examiners are thinking.

All posts by yttrium1

Types of Memory

I’ve already written about “Working Memory”, which is so central to Cognitive Load Theory. However, the key question which I felt CLT left unanswered is how our memory of the lesson as an event relates to, or becomes abstracted into, a change in the putative schema associated with that lesson. I’d love to say that I now have an answer to this. I don’t, but I do feel that I’m a bit closer. I was also taken in a different direction, having found the work of Professors Gais and Born of Tübingen University, who work on sleep and memory (related article).

Memory Types

A starting point was that I was confused by terms like Procedural Memory and Declarative Memory, and I didn’t really know that Semantic Memory and Episodic Memory were things I should be thinking about until I read Peter Ford’s blog.

Endel Tulving, who is widely credited with introducing the term “Semantic Memory”, commented, in 1972, that in one single collection of essays there were twenty-five categories of memory listed. No wonder I was confused.

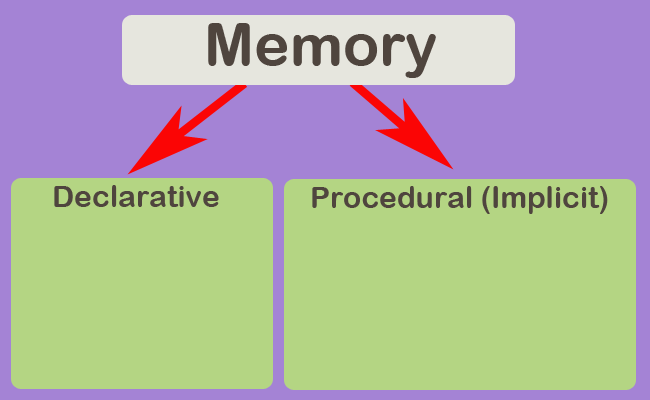

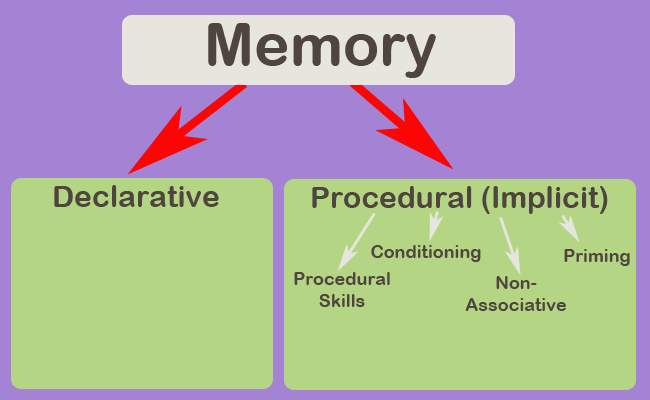

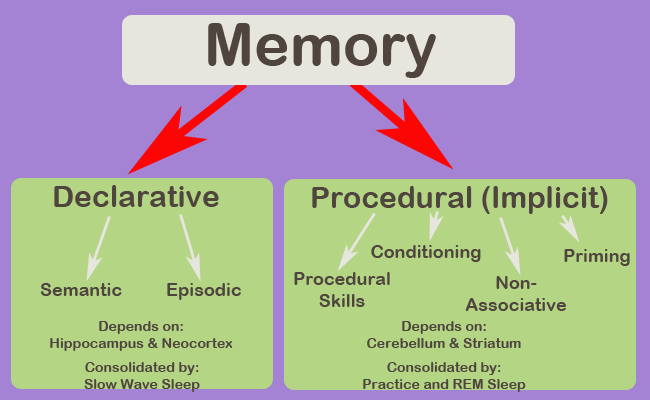

Today the main, usually accepted, split is between Declarative (or Explicit) and Procedural (or Implicit) Memories. Declarative Memory can be consciously brought to mind (an event or a definition), whereas Procedural Memory is unconscious (riding a bike).

Procedural Memory

The division between the two was starkly shown in the 1950s when an operation on a patient’s brain to reduce epilepsy led to severe anterograde amnesia (inability to form new memories). That patient (referred to in the literature as H.M.) could, through practice, improve procedural skills (such as mirror drawing), even though he did not remember his previous practice (see Acquisition of Motor Skill after Medial Temporal-Lobe Excision, also discussed in Recent and Remote Memories).

Similar studies have identified several processes which obviously involve memory, but which are unaffected by deficits in the Declarative Memory System of amnesiacs and, sometimes, drunks.

- Procedural Skills (see Squire and Zola below)

- Conditioning (as in Pavlov’s Dogs)

- Non-associative learning (habituation, i.e. getting used to and so ignoring stimuli, and sensitisation, learning to react more strongly to novel stimuli)

- Priming (using one stimulus to alter the response to another)

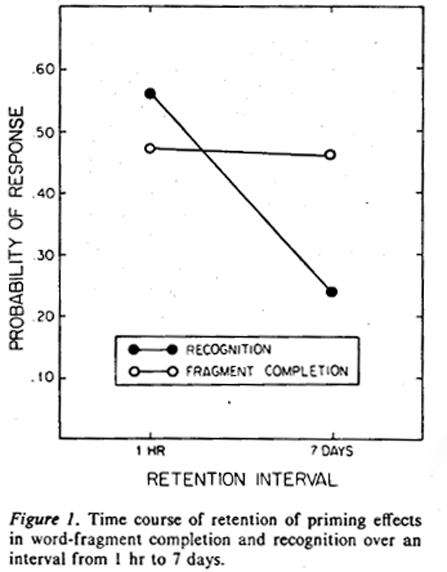

Priming was demonstrated by Tulving and co-workers in an experiment which I thought was interesting in an education context. They asked people to study a list of reasonably uncommon words without telling them why. The participants were then tested an hour later in two ways: with a new list, being asked which words they recognised from the previous list, and by being asked to complete words with missing letters (like the Head to Head round in Pointless!). Unsurprisingly, words which had been on the study list were completed more successfully than new words (0.46 completion vs 0.31). More interestingly, this over-performance on word-fragment completion due to having been primed by the study list was still present in new tests 7 days later. Performance on the recognition test, on the other hand, declined significantly over those seven days (see their Figure 1 below).

Tulving was using experiments designed by Warrington and Weiskrantz (discussed in “Implicit Memory: History and Current Status“), who found that amnesiacs did as well as their control sample in fragment completion, having been primed by a previous list which they did not remember seeing.

Tulving took the independence of performance on the word recognition tests and the fragment completion tests as good evidence that there were two different memory systems at play.

Squire and Zola give examples of learnt procedural skill tests which clearly require memory of some type. For example:

In this task, subjects respond as rapidly as possible with a key press to a cue, which can appear in any one of four locations. The location of the cue follows a repeating sequence of 10 cued locations for 400 training trials. … amnesic patients and control subjects exhibited equivalent learning of the repeating sequence, as demonstrated by gradually improving reaction times and by an increase in reaction times when the repeating sequence was replaced by a random sequence. Reaction times did not improve when subjects were given a random sequence.

Structure and Function of Declarative and Nondeclarative Memory Systems

They also cite weather predictions from abstract cue cards, deducing whether subsequent letter sequences follow the same set of rules as a sample set, and identifying which dot patterns are generated from the same prototype, as procedural skills where amnesiacs perform as well as ordinary people despite not remembering their training sessions.

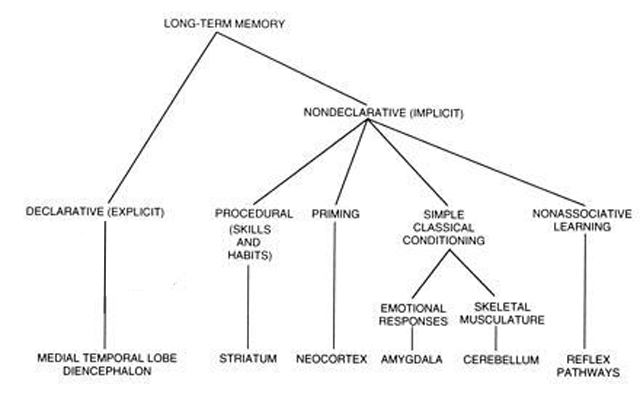

In fact, such is the range of memory types identified that Squire chooses to see “Procedural Memory” as just a portion of the whole group, and to refer to the whole group as “Non-Declarative”.

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC33639/

Summary for Procedural (Non-Declarative or Implicit) Memory

In summary, Procedural or Implicit Memory, the memory element of unconscious skills or reactions, is limited, but is more than just motor skills or muscle memory. There are priming and pattern recognition elements which could be exploited in an education context. In fact, I do wonder if the testing effect (retrieval practice) is not a form of priming, and therefore non-declarative.

Declarative Memory

As suggested earlier, Tulving is credited with introducing the idea of Semantic Memory. He envisaged three memory types, with Semantic Memory, what we teachers mean by knowledge, dependent on Procedural Memory, and the final type, Episodic Memory, memory of events, dependent on Semantic Memory. Episodic Memory and Semantic Memory are now regarded as interrelated systems of Declarative Memory.

In her 2003 Review Sharon Thompson-Schill neatly illustrated the difference between the two types of declarative memory as:

The information that one ate eggs and toast for breakfast is an example of episodic memory, whereas knowledge that eggs, toast, cereal, and pancakes are typical breakfast foods is an example of semantic memory.

Neuroimaging Studies of Semantic Memory, Sharon L. Thompson-Schill

Episodic memory

That Episodic Memory is dependent on the hippocampus structure and the surrounding Medial Temporal Lobe has been recognised since its excision in patient H.M., and modern brain imaging studies such as those reviewed by Thompson-Schill confirm this. As well as having specific cells dedicated to encoding space and time, the hippocampus seems to integrate the sensory inputs from widely spread sensory and motor processing areas of the brain, recognising similarity and difference with previous memory traces and encoding them accordingly, although the memory traces that make up the episode remain widely distributed in the relevant sensorimotor areas.

Over time changes in the encoding of the memory reduce its dependence on the hippocampus. The memory of an episode ends up distributed across the neocortex (the wrinkled surface of the brain) without a hippocampal element, hence H.M. retaining previous memories. This change is called systems consolidation (see consolidation).

Semantic Memory

The structures underlying Semantic Memory, even its very existence, are less clear cut. The possibilities (see review by Yee et al) are:

- That Semantic Memories are formed in a separate process from Episodic memory (for example, directly from Working Memory to Long Term Memory via the Prefrontal Cortex). Tulving took this view, but the evidence from amnesiacs is that for them to form new Semantic Memory without Episodic Memory is a slow and limited process.

- That the brain abstracts information from Episodic Memory, and sorts and stores it by knowledge domain. There is some evidence supporting this; brain damage in specific areas can damage memory of specific categories of object (e.g. living vs non-living).

- That the process of abstraction is specific to the sense or motor skill involved, possibly with some other brain area (the Anterior Temporal Lobe – ATL) functioning as an over-arching hub which links concepts across several sensorimotor abstractions (see Patterson et al for a review of the ATL as a hub). This seems to be the most widely accepted theory because it best matches the brain imaging data, which shows semantic memory operations activating general areas like the ATL and the relevant sensorimotor brain areas, but with the exact area of activation shifting depending on the level of abstraction.

- That there is no such thing as Semantic Memory and that what seems like knowledge of a concept is in fact an artifact arising from the combined recall of previous episodic encounters with the concept. Such models of memory are called Retrieval Models…

……retrieval models place abstraction at retrieval. In addition, the abstraction is not a purposeful mechanism per se. Instead, abstraction incidentally occurs because our memory retrieval mechanism is reconstructive. Hence, semantic memory in retrieval-based models is essentially an accident due to our imperfect memory retrieval process.

“Semantic Memory”, Eiling Yee, Michael N. Jones, Ken McRae

Both of the latter two possibilities have knowledge rooted in sensorimotor experience (embodied). How are purely abstract concepts treated in an embodied system? The evidence shows that abstract concepts with an emotional content, e.g. love, are embodied in those areas which process emotion, but those with less emotional content, e.g. justice, are dealt with more strongly in those regions of the brain known to support language:

It has therefore been suggested that abstract concepts for which emotional and/or sensory and motor attributes are lacking are more dependent on linguistic, and contextual/situational information. That is, their mention in different contexts (i.e., episodes) may gradually lead us to an understanding of their meaning in the absence of sensorimotor content. Neural investigations have supported at least the linguistic portion of this proposal. Brain regions known to support language show greater involvement during the processing of abstract relative to concrete concepts.

“Semantic Memory”, Eiling Yee, Michael N. Jones, Ken McRae

Consolidation and Sleep

Memory consolidation is thought to involve three processes operating on different time scales:

- Strengthening and stabilisation of the initial memory trace (synaptic consolidation – minutes to hours)

- Shifting much of the encoding of the memory to the neo-cortex (systems consolidation – days to weeks)

- Strengthening and changing a memory by recalling it (reconsolidation – weeks to years)

Clearly all three of these are vital to learning, but the discoveries, and the terms for the processes involved, as used in neuroscience, have not yet made much of an impact on educational learning theory.

Synaptic Consolidation

Much of the information encoded in a memory is thought to reside in the strengths of the synaptic interconnections in an ensemble of neurons. The immediate encoding seems to be via temporary (a few hours) chemical alterations to the synapse strength. Conversion to longer term storage begins to happen within a few minutes by the initiation of a process called Long Term Potentiation (LTP), where new proteins are manufactured (gene expression) which make long lasting alterations to synapse strength.

Tononi and Cirelli have hypothesised that sleep is necessary to allow the resetting and rebuilding of the resources necessary for the brain’s ability to continually reconstruct its linkages in this way (maintain plasticity).

Systems Consolidation

Although subject to Long Term Potentiation (LTP), the hippocampus is thought to be only a temporary store. Diekelmann and Born refer to it as the Fast Store, which through repeated re-activation of memories trains the Slow Store within the neo-cortex. This shift away from relying on the hippocampus for the integration of memory is Systems Consolidation.

It is the contention of Jan Born’s research group that this Systems Consolidation largely occurs during sleep. They argue further that while Procedural Memory benefits from rehearsal and reenactment in REM sleep (which we usually fall into later during our sleep), it is the Slow Wave Sleep (SWS), which we first fall into, which eliminates weak connections, cleaning up the new declarative memories, and…

…. the newly acquired memory traces are repeatedly re-activated and thereby become gradually redistributed such that connections within the neocortex are strengthened, forming more persistent memory representations. Re-activation of the new representations gradually adapt them to pre-existing neocortical ‘knowledge networks’, thereby promoting the extraction of invariant repeating features and qualitative changes in the memory representations.

“The memory function of sleep” Susanne Diekelmann and Jan Born

Born is pretty bullish about the importance of sleep. In an interview with Die Zeit he said:

Memory formation is an active process. First of all, what is taken during the day is stored in a temporary memory, for example the hippocampus. During sleep, the information is reactivated and this reactivation then stimulates the transfer of the information into the long-term memory. For example, the neocortex acts as long-term storage. But: Not everything is transferred to the long-term storage. Otherwise the brain would probably burst. We use sleep to selectively transfer certain information from temporary to long-term storage.

(Translation by Google).

“Without sleep, our brains would probably burst” Zeit Online

Reconsolidation

The idea that memories can change is not new, but the discovery in animals that revisiting memories destabilises them making them vulnerable to change or loss, has opened up new possibilities, such as the removal or modification of traumatic memories.

Nader et al’s discovery was that applying drugs which suppress protein manufacture to an area supporting a revisited memory left it vulnerable to disruption. This suggests that protein production like that seen in Long Term Potentiation (LTP) is necessary to reconsolidate a memory (make it permanent again) once that memory has been recalled. Until then it can be changed.

If the process applies to semantic memory, the fact that previously locked-down memories become vulnerable to change (labile) when they are re-accessed might be the pathway for altering or improving memories which are revisited during revision, or might allow for the rewiring of stubborn misconceptions.

Conclusions / Implications for Teaching Theory

1 It is clear from the above that memory formation is not the very simplified “if it is in Working Memory it ends up in Long Term Memory” process that tends to be assumed as an adjunct to Cognitive Load Theory. However, a direct link between a Baddeley-type WM model and Long Term Memory formation is not precluded by any of the above. Many of the papers which I looked at stressed the importance in memory formation of the Pre-Frontal Cortex (PFC), which is usually taken to be the site of Executive Control and possibly Working Memory. Blumenfeld and Ranganath, for example, suggest that the ventrolateral region of the PFC plays a role in selecting where attention should be directed, and in the process increases the likelihood of successful recall.

2 I think it is clear that there is no evidence in the above for schema formation. However, there are researchers who believe that their investigations using brain scans and altering brain chemistry do show schema formation (the example given, and others, were cited by Ghosh and Gilboa). I have not included these studies, because I was not convinced I understood them, they did not appear in the later reviews I was reading, and they do not have huge numbers of citations according to Google Scholar.

3 What I do think the above does provide is a clear answer to the “do skills stand alone from knowledge” debate. A qualified no.

Procedural or Implicit memory does not depend on declarative memory – knowledge, and so those skills which fall within Procedural Memory, like muscle memory (Squire’s Skeletal Musculature Conditioning), are stand-alone skills.

These are the memories/skills which benefit most strongly from sleep; in fact Kuriyama et al found that the more complex the skill, the more sleep helped embed it. This might be a useful finding in some areas of education, e.g. introducing a new manipulative or sporting skill, but only using it a day or two later, once sleep has had an effect.

However, Procedural Memory as discussed by a neuro-scientist is not the “memory of how to carry out a mathematical procedure” that a maths teacher might mean by procedural memory. This maths teacher meaning is purely declarative. Similarly the skill of reading a book rests upon declarative memory of word associations (amnesiacs with declarative memory impairment can only make new word associations very slowly).

4 I was surprised that “the immediate memory, to fast memory, to slow memory” process that molecular and imaging studies of the brain have elucidated does not match the model of Short Term Memory to Long Term Memory which I thought I knew from psychology. But I am not certain yet what difference that makes to my teaching, other than to think that we do not take sleep into account anything like enough when scheduling learning. Should we be moving on to new concepts, or even using existing concepts, before we have allowed sleep to act upon them? Do we risk concepts being overwritten in the hippocampus by moving along too quickly?

5 Finally, I thought that Reconsolidation was interesting. It might not make a big difference to the way we teach, but it might explain good practice, like getting the students to recall their misconception before attempting to address it. Reconsolidation could be a fertile area of investigation; I am sure that there must be lots of uses for it which I have not thought of.

That’s it. You have made it to the end, well done. Sorry that what started out as a small research project for myself turned into something larger. I had not realised quite how big a subject memory was, and I’m not sure I’ve even scratched the surface.

If you want to read more about the nature of memory, consolidation and particularly LTM I recently found this 2019 review:

Is plasticity of synapses the mechanism of long-term memory storage?

AfL, Feedback and the low-stakes testing effect

Assessment for Learning is never far from a UK teacher’s mind. We all know of the “purple pen of pain” madness that SLTs can impose in the hope that their feedback method will realise AfL’s potential and, most importantly, satisfy the inspectors.

I’ve read a couple of posts recently wondering why AfL has failed to deliver on the big improvements that its authors hoped for. AfL’s authors themselves said:

if the substantial rewards promised by the evidence are to be secured, each teacher must find his or her own ways of incorporating the lessons and ideas that are set out above into his or her own patterns of classroom work. Even with optimum training and support, such a process will take time.

but it has now been 20 years since Black and Wiliam wrote those words in “Inside the Black Box” and formative feedback has been a big push in UK schools ever since.

The usual assumption is that we have still not got formative assessment correct. An example of this can be found in the recent work of eminent science teachers who have blogged about how formative assessment is not straightforward in science. Adam Boxer started the series with an assessment of AfL’s failure to deliver, and his has been followed by thoughtful pieces about assessment and planning in science teaching.

Thinking about AfL brought me back to the concerns I expressed here about the supposedly huge benefits from feedback and made me realise that I’ve never looked at any of the underlying evidence for feedback’s efficacy.

The obvious starting place was Black and Wiliam’s research review which had an entire issue of “Assessment in Education” devoted to it:

Black had moved from a Physics background into education research, and had a specific interest in designing courses that had formative processes built into their assessment scheme, courses and ideas which were wiped out by the evolution of GCSEs in the 1990s. As they put it, it was “as part of this effort to re-assert the importance of formative assessment” that Black and Wiliam were commissioned to conduct a review of the research on formative assessment, and they used their experience of working with teachers to write “Inside the Black Box” for a wider audience.

I have to wonder, would someone else looking at the same research, without “formative assessment” as their commissioned topic, arrive at the same conclusions?

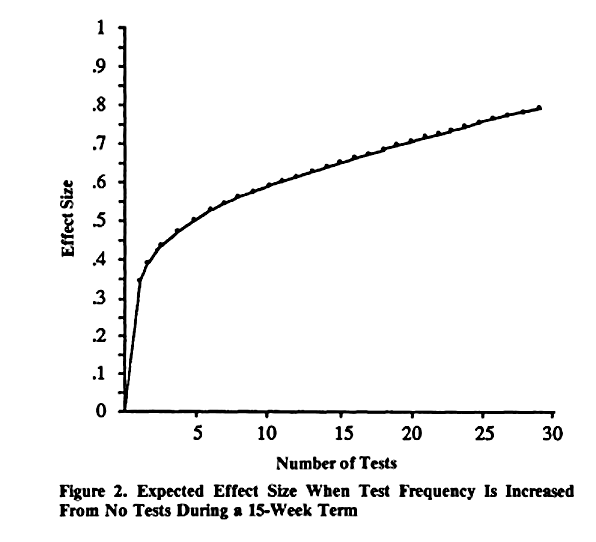

Would someone else instead conclude that “frequent low-stakes testing is very effective” was the important finding of the feedback research literature? Certainly the importance of testing frequency is clear to the authors whom Black and Wiliam cite. In fact one of the B-D and Kulik papers is entitled “Effects of Frequent Classroom Testing” and contains this graph:

Which is a regression fit of the effect sizes that they found for different test frequencies.


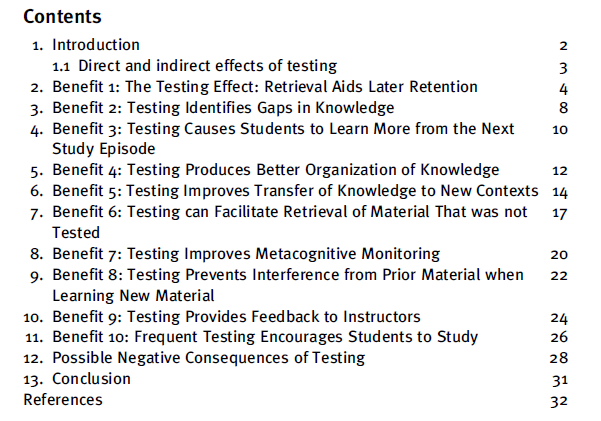

The low-stakes testing effect was pretty well established then, and it is very well established now. For example, just the contents of “Ten Benefits of Testing and Their Applications to Educational Practice” make the benefits pretty clear:

Where would we be today if Black and Wiliam had promoted low-stakes testing twenty years ago rather than formative assessment? Quite possibly nothing would have changed. They themselves profess to be puzzled as to why they had such a big impact; maybe formative assessment was just in tune with the zeitgeist, and if it were not Black and Wiliam it would have been someone else. But just possibly, if my interpretation is correct (that without the testing effect the evidence for feedback is pretty weak), we might be further on than we are right now.

Testing the Wave Equation in a Gratnells Tray

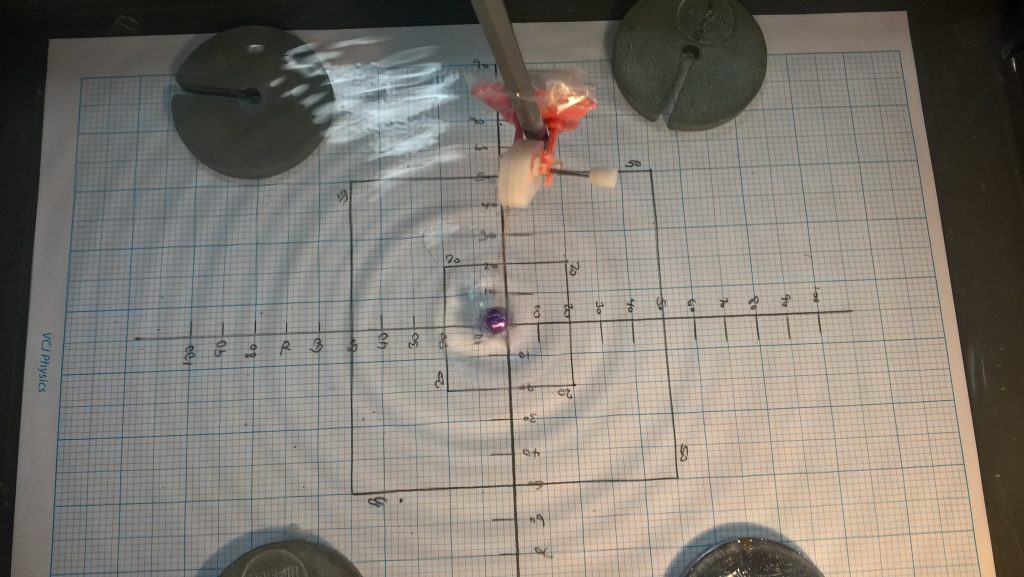

Ripple tank experiments are not really class practicals, they are usually demos, but we do a block of work on Waves in Y8 and again in Y10 and so I wanted some practical work to go with it. The fact that the GCSE has a required waves practical that is really only a demo added impetus to my thinking.

I started with the classic AQA A level ISA experiment (PHY-3T-Q09), where multiple crossings of a Gratnells tray are timed and the waves’ speed is calculated, and tried to build something from there.

By adding clockwork dippers made from chattering teeth toys to make a wave train, we got a set of practicals that work quite well. However, the match between the measured wave speed and the wave speed calculated from the wave equation is far from perfect. I think there are probably two reasons: the dipper frequency varies quite a lot (we could extend things by getting students to measure their own dipper frequency instead of demoing the measurement of one, as has been our practice so far), and I have a suspicion that the single waves do actually run a bit faster than the wave trains made by the dipper.
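As a rough sketch of the comparison involved, the two speeds can be worked out side by side: one from timing a single pulse over several crossings of the tray, the other from the wave equation (speed = frequency × wavelength). The readings below are invented purely for illustration (they are not class data) and the function names are assumptions of this sketch.

```python
def speed_from_crossings(tray_length_m, crossings, total_time_s):
    """Speed of a single pulse timed over several crossings of the tray."""
    return tray_length_m * crossings / total_time_s


def speed_from_wave_equation(frequency_hz, wavelength_m):
    """Speed predicted by the wave equation, v = f * wavelength."""
    return frequency_hz * wavelength_m


def percent_difference(measured, calculated):
    """Size of the mismatch, as a percentage of the measured value."""
    return abs(measured - calculated) / measured * 100


# Hypothetical readings, for illustration only (not real measurements).
measured = speed_from_crossings(tray_length_m=0.40, crossings=5, total_time_s=4.0)
calculated = speed_from_wave_equation(frequency_hz=4.0, wavelength_m=0.11)

print(f"timed pulse:   {measured:.2f} m/s")
print(f"wave equation: {calculated:.2f} m/s")
print(f"mismatch:      {percent_difference(measured, calculated):.0f}%")
```

Because the calculated speed is directly proportional to the dipper frequency, any percentage error in the frequency carries straight through to the calculated speed, which is one reason for having students measure their own dipper.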

The worksheets (follow the link below) are for four or five lessons and are deliberately tough. We leave higher groups pretty much to their own devices with them and give more help to lower groups. We’ve tried it with several groups now and think it has some value.

I’ve left in the notes for the two speed of sound experiments, which we only do a bit later on in the course if we have time.

If more of us take this up then I am hoping someone will have a good idea for making a more reliable dipper, or even get someone to manufacture one.

You say Schemas, I say Schemata

Well actually I don’t, I say Schemas. I honestly didn’t realise, until I started researching them, that schemata was the plural of schema!

Anyway, why are they significant? Well, in my post about Working Memory I said Baddeley asserted that amongst the limited number of items being held in the Working Memory, and therefore available for manipulation and change, could be complex, interrelated ideas: schemas.

Allowing more complex memory constructs to be accessed is particularly important for Cognitive Load Theory, because if working memory is really limited to just four simple things, numbers or letters, then Working Memory cannot be central to the learning process in a lesson. What is going on in a lesson is a much richer thing than could be captured in four numbers. The escape clause for this is to allow the items stored in, or accessed by, Working Memory to be Schemas or schemata.

A schema is then a “data structure for representing generic concepts stored in memory” and they “represent knowledge at all levels of abstraction” (Rumelhart: Schemata The Building Blocks of Cognition 1978).

How We Use Schemas

To give an example of what a schema is and how it is used we can take an example from Anderson (when researching this I was pointed at Anderson by Sue Gerrard), who developed the schema idea in the context of using pre-existing schemas to decode text.

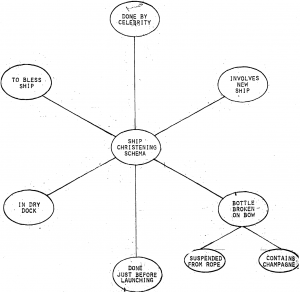

In 1984 Anderson and Pearson gave an example containing British ship building and industrial action, something only those of us from that vintage are likely to appreciate. The key elements that Anderson and Pearson think someone familiar with christening a ship would have built up into a schema are shown in the figure:

They then discuss how that schema would be used to decode a newspaper article:

‘Suppose you read in the newspaper that,

“Queen Elizabeth participated in a long-delayed ceremony in Clydebank, Scotland yesterday. While there is bitterness here following the protracted strike, on this occasion a crowd of shipyard workers numbering in the hundreds joined dignitaries in cheering as the HMS Pinafore slipped into the water.”

It is the generally good fit of most of this information with the SHIP CHRISTENING schema that provides the confidence that (part of) the message has been comprehended. In particular, Queen Elizabeth fits the <celebrity> slot, the fact that Clydebank is a well known ship building port and that shipyard workers are involved is consistent with the <dry dock> slot, the HMS Pinafore is obviously a ship and the information that it “slipped into the water” is consistent with the <just before launching> slot. Therefore, the ceremony mentioned is probably a ship christening. No mention is made of a bottle of champagne being broken on the ship’s bow, but this “default” inference is easily made.’

They expand by discussing how more ambiguous pieces of text with less clear cut fits to the “SHIP CHRISTENING” schema might be interpreted.

Returning to Rumelhart, we find the same view of schemas as active recognition devices “whose processing is aimed at the evaluation of their goodness of fit to the data being processed.”
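To make the “data structure” idea concrete, here is a toy sketch of a schema as a set of named slots, with a crude goodness-of-fit score and default inference for unfilled slots. It is only an illustration under my own assumptions: the `Schema` class, its method names, the `bottle` slot name and the scoring rule (fraction of slots filled) are all hypothetical, and are not Anderson and Pearson’s or Rumelhart’s actual model.

```python
from dataclasses import dataclass, field


@dataclass
class Schema:
    """A toy schema: named slots, plus defaults to infer when a slot is unfilled."""
    name: str
    slots: list[str]
    defaults: dict[str, str] = field(default_factory=dict)

    def goodness_of_fit(self, observed: dict[str, str]) -> float:
        """Fraction of this schema's slots that the observed details fill."""
        filled = [slot for slot in self.slots if slot in observed]
        return len(filled) / len(self.slots)

    def interpret(self, observed: dict[str, str]) -> dict[str, str]:
        """Observed details, with unfilled slots completed from the defaults."""
        reading = {slot: observed[slot] for slot in self.slots if slot in observed}
        for slot, value in self.defaults.items():
            reading.setdefault(slot, value)
        return reading


# Slot names follow the ones mentioned in the quoted passage; "bottle" is my label.
ship_christening = Schema(
    name="SHIP CHRISTENING",
    slots=["celebrity", "dry dock", "just before launching", "bottle"],
    defaults={"bottle": "champagne broken on the bow"},
)

# Details picked out of the newspaper article.
article = {
    "celebrity": "Queen Elizabeth",
    "dry dock": "Clydebank shipyard workers",
    "just before launching": "HMS Pinafore slipped into the water",
}

print(ship_christening.goodness_of_fit(article))
print(ship_christening.interpret(article)["bottle"])
```

The article never mentions the bottle, so that slot lowers the fit score slightly, but the interpretation still contains it as a default inference.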

Developing Schemas

In his review Rumelhart states that:

‘From a logical point of view there are three basically different modes of learning that are possible in a schema-based system.

- Having understood some text or perceived some event, we can retrieve stored information about the text or event. Such learning corresponds roughly to “fact learning” Rumelhart and Norman have called this learning accretion

- Existing schemata may evolve or change to make them more in tune with experience….this corresponds to elaboration or refinement of concepts. Rumelhart and Norman have called this sort of learning tuning

- Creation of new schemata…. There are, in a schema theory, at least two ways in which new concepts can be generated: they can be patterned on existing schemata, or they can in principle be induced from experience. Rumelhart and Norman call learning of new schemata restructuring‘

Rumelhart expands on accretion:

‘thus, as we experience the world we store, as a natural side effect of comprehension, traces that can serve as the basis of future recall. Later, during retrieval, we can use this information to reconstruct an interpretation of the original experience – thereby remembering the experience.’

And tuning:

‘This sort of schema modification amounts to concept generalisation – making a schema more generally applicable.’

He is not keen on “restructuring”, however, because he regards a mechanism to recognise that a new schema is necessary as something beyond schema theory, and regards the induction of a new schema as likely to be a rare event.

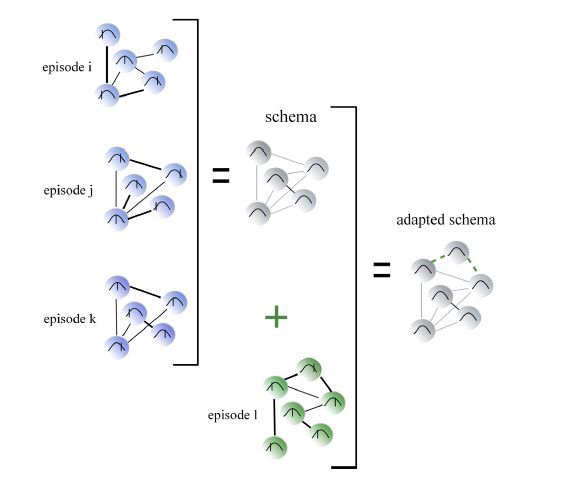

A nice illustration (below) of Rumelhart’s tuning – schemas adapting and becoming more general as overlapping episodes are encountered – comes from Ghosh and Gilboa’s paper which was shared by J-P Riodan. Ghosh and Gilboa’s work looks at how the psychological concept of schema as developed in the 70s and 80s might map onto modern neuroimaging studies, as well as giving a succinct historical overview.

If accretion, tuning and restructuring are starting to sound a bit Piagetian to you (“assimilation and accommodation” for “tuning and restructuring”) then you would be right. Smith’s Research Starter on Schema Theory (I’ve accessed Research Starters through my College of Teaching Membership) says that Piaget coined the term schema in 1926!

It would be a lovely irony if the idea that allowed Cognitive Load Theory to flourish were attributable to the father of constructivists. However, Derry (see below) states that there is little overlap in the citations of Cognitive Schema theorists, like Anderson and later Sweller, and the Piaget school of schema thought, so Sweller probably owes Piaget for little except the name.

Types of Schema

In her 1996 review of the topic Derry finds three kinds of Schema in the literature:

- Memory Objects. The simplest memory objects are named p-prims by diSessa (and have been the subject of discussion amongst science teachers, see for example E=mc2andallthat). However, above p-prims are “more integrated memory objects hypothesized by Kintsch and Greeno, Marshall and Sweller and Cooper, and others, these objects are schemas that permit people to recognise and classify patterns in the external world so they can respond with the appropriate mental or physical actions.” (Unfortunately I can’t find any access to the Sweller and Cooper piece.)

- Mental Models. “Mental model construction involves mapping active memory objects onto components of the real-world phenomenon, then reorganising and connecting those objects so that together they form a model of the whole situation. This reorganizing and connecting process is a form of problem solving. Once constructed, a mental model may be used as a basis for further reasoning and problem solving. If two or more people are required to communicate about a situation, they must each construct a similar mental model of it.” (Derry connects mental models to constructivist teaching and again references DiSessa – “Toward an Epistemology of Physics”, 1993)

- Cognitive Fields. “…. a distributed pattern of memory activation that occurs in response to a particular event.” “The cognitive field activated in a learning situation .. determines which previously existing memory objects and object systems can be modified or updated by an instructional experience.”

Now it is not clear to me that these three are different things; they seem to represent only differences in emphasis or abstraction. CLT would surely be interested in Cognitive Fields, but Derry associates Sweller with Memory Objects, while Mental Models seems to be as much about the tuning of the schema as the schema itself.

DiSessa does, however, have a different point of view when it comes to science teaching. Where most science teachers would probably see misconceptions as problems built-in at the higher schema level, DiSessa sees us as possessing a stable number of p-prims whose organisation is at fault, and that by building on the p-prims themselves we can alter their organisation.

Evidence for Schemas

There is some evidence for the existence of schemas. Because interpretation of text is where schema theory really developed, there are experiments which test interpretation or recall of stories or text in a schema context. For example Bransford and Johnson 1972 conducted experiments that suggest that pre-triggering the correct Cognitive Field before listening to a passage can aid its recall and understanding.

Others have attempted to show that we arrive at answers to problems, not via logic but by triggering a schema. One approach to this is via Wason’s Selection Task which only 10% of people correctly answer (I get it wrong every time). For example Cheng et al found that providing a context that made sense to people led them to the correct answer, and argue that this is because the test subjects are thus able to invoke a related schema. They term higher level schemas used for reasoning, but still rooted in their context “pragmatic reasoning schemas”.
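For anyone who, like me, keeps getting the Selection Task wrong, the logic can be sketched in a few lines. This is only an illustration and it assumes the commonly quoted letter/number version of the task (rule: “if a card has a vowel on one side, it has an even number on the other”); the card faces and the function names are my own choices, not taken from Wason or from Cheng et al. The only cards worth turning are those that could falsify the rule: a visible vowel (the back might be odd) and a visible odd number (the back might be a vowel).

```python
def is_vowel(face: str) -> bool:
    """True if the visible face is a single vowel letter."""
    return face.upper() in ("A", "E", "I", "O", "U")


def is_odd_number(face: str) -> bool:
    """True if the visible face is an odd whole number."""
    return face.isdigit() and int(face) % 2 == 1


def cards_to_turn(visible_faces: list[str]) -> list[str]:
    """Return the cards that could falsify 'if vowel then even'.

    A consonant cannot break the rule whatever is on its back, and
    neither can an even number, so those cards are left alone.
    """
    return [f for f in visible_faces if is_vowel(f) or is_odd_number(f)]


# Hypothetical set of four cards, chosen for illustration.
print(cards_to_turn(["E", "K", "4", "7"]))  # prints ['E', '7']
```

The tempting wrong answer is to turn the “4”, which can confirm the rule but can never break it.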

I am not sure that any of the evidence is robust, but schemas/schemata continue to be accepted because the idea that knowledge is organised and linked at more and more abstract levels offers a lot of explanatory power in thinking about what is happening in one’s own head, and what is happening in learning more generally.

Schema theory also fits with the current reaction against the promotion of the teaching of generic skills. If we can only think successfully about something if we have a schema for it, and schemas start off at least as an organisation of memory objects, then thought is ultimately rooted in those memory objects; thought is context specific. I suspect that this may be what Cheng et al’s work is confirming for us.

Introduction to Lower Sixth Physics Document

In 2017 we have been given more time in the lower sixth to teach Physics; we are now up to five hours a week. To take advantage of this I have written a “Mathematical Methods Introduction”. It is meant to be a hard introduction to the kind of maths we do in Physics, but its content is slightly tangential to the course; there was no point in repeating what we already do.

Notes for Y8 Energy

These are currently (August 2017) incomplete because I need to add in some HW exercises.

The Waves portion for Y8 is my next job.

Energy purists will notice that I haven’t adopted the energy newspeak. This is because we use CIE for our GCSE; CIE have not yet indicated that they will go over to newspeak. I think (having looked at their sample exams) that someone who used this approach would be OK in taking the new AQA GCSE, which is deliberate because some of our boys change schools and because CIE might change eventually.

Working Memory

So if you haven’t come across the concept of working memory, you really haven’t been paying attention. Understanding Working Memory provides the panacea for all your teaching ills:

- David Didau (@DavidDidau) has used the concept to discuss problems that might come from “Reading Along“.

- Greg Ashman (@greg_ashman) champions its use because it underlies “Cognitive Load Theory”.

- Baddeley’s interpretation of Working Memory provides a theoretical underpinning of Mayer’s “Dual Coding” – you learn more from the spoken word with pictures – which is all over the place at the moment.

It is a straightforward concept – we can only give attention to so much at any one time.

It seems clear to me that theories which aim to understand and allow for what could be a major bottle-neck in the cognitive process have real potential to inform teaching practice. In this post I discuss my interpretation of evidence for and the models of Working Memory. [Note that the evidence discussed here is from psychology. There is starting to be a lot more evidence about memory from neuro-imaging which I begin to discuss here.]

Any discussion of the application of WM to theories of teaching will have to wait for a later post.

Baddeley’s Original Model

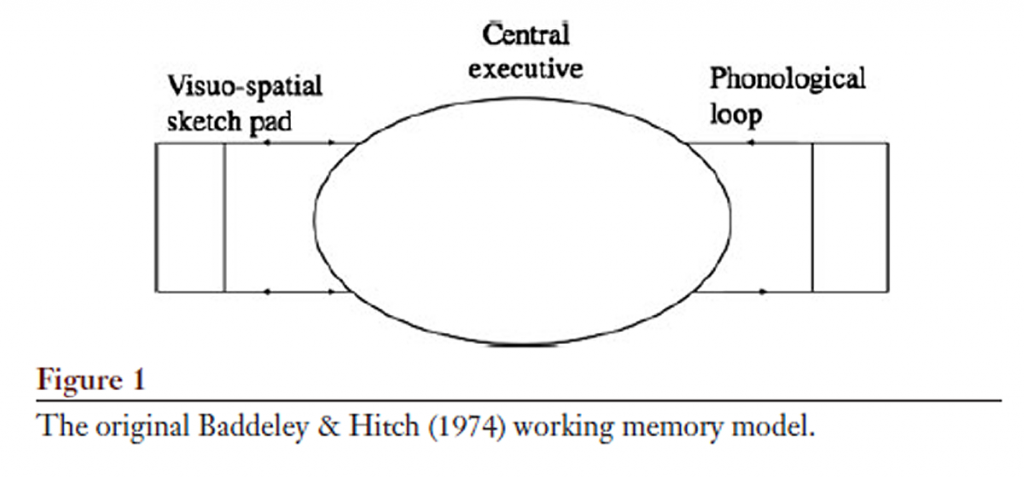

Academic papers on Working Memory usually start by referencing Baddeley & Hitch’s 1974 paper; this proposed three elements to working memory:

- The Visuo-spatial sketch pad (a memory register for objects and position)

- The Phonological Loop (a memory register for spoken language)

- and a Central Executive (control)

The working memory (WM) concept grew out of “dual memory” models, the idea that Short Term Memory (STM) and Long term Memory (LTM) were very different things. Baddeley and Hitch were working on experiments to test the interface between the two. In an autobiographical review from 2012 Alan Baddeley describes how their three element model arose from experiments such as trying to break down students’ memory for spoken numbers with visual tasks:

“In one study, participants performed a visually presented grammatical reasoning task while hearing and attempting to recall digit sequences of varying length. Response time increased linearly with concurrent digit load. However, the disruption was far from catastrophic: around 50% for the heaviest load, and perhaps more strikingly, the error rate remained constant at around 5%. Our results therefore suggested a clear involvement of whatever system underpins digit span, but not a crucial one. Performance slows systematically but does not break down. We found broadly similar results in studies investigating both verbal LTM and language comprehension, and on the basis of these, abandoned the assumption that WM comprised a single unitary store, proposing instead the three-component system shown in Figure 1 (Baddeley & Hitch 1974).”

In the 1970s “cognitive science” had begun to influence “cognitive psychology”, and cognitive science is very much about using our ideas about computers to help understand the brain, and our ideas about the brain to advance computing (AI). Those of us old enough to remember the days when computing was severely limited by the capabilities of the hardware will recognise the Cog. Sci. ideas that informed this hypothesis. The limitations of the very small memory which held the bits of information your CPU was directly working on could slow down the whole process (even today, with the great speed of separate computer memory, processors still have “on-chip” working memory known as the “scratch pad”). Equally, a similar limitation will be familiar to anyone who makes a habit of doing long multiplication in their head; there’s only so much room for the numbers you are trying to remember so they can be added up later, and that limits your ability to perform the task. As such the limited Working Memory idea has instinctive appeal; the WM is limited, the LTM is not, but the LTM is not immediately accessible.

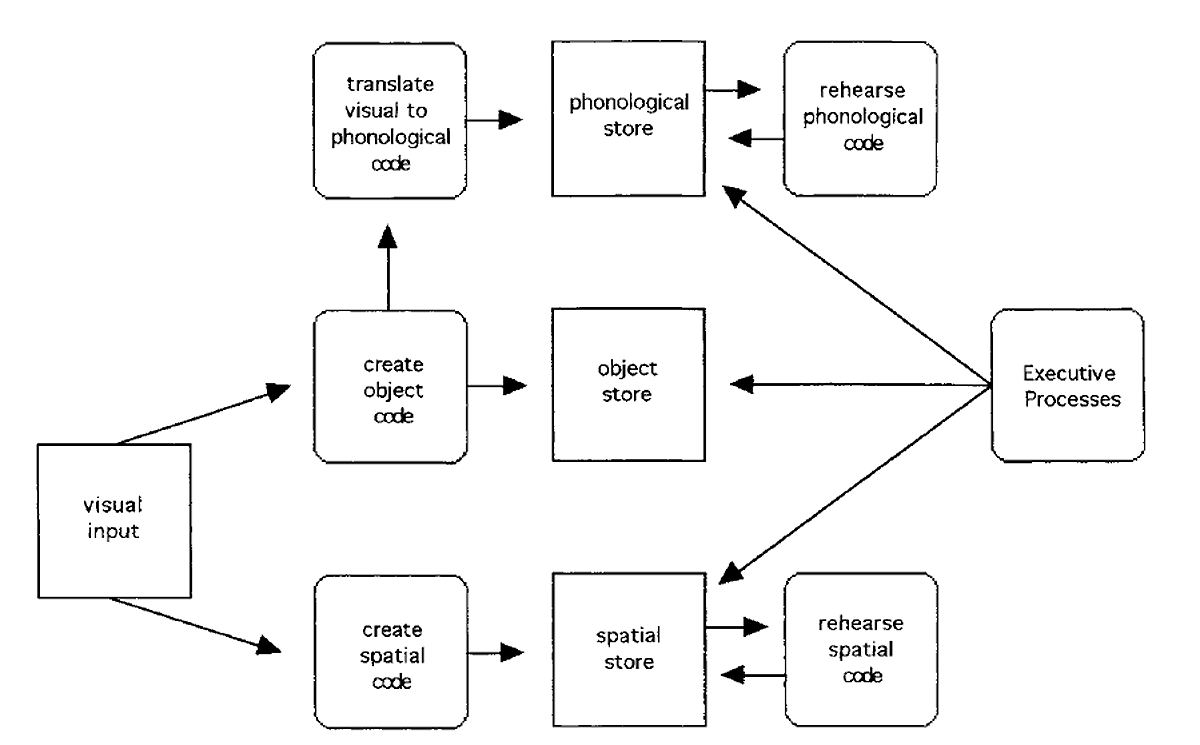

That there are two separate memory registers connected to different senses gives rise to all sorts of opportunities for designing learning with the two being used in parallel – dual coding. But it does beg the question: why stop there? A well cited 90s paper uses PET scans to identify three brain areas which might represent separate Working Memory caches for language, objects and position, leading them to propose a four way model just for visual information:

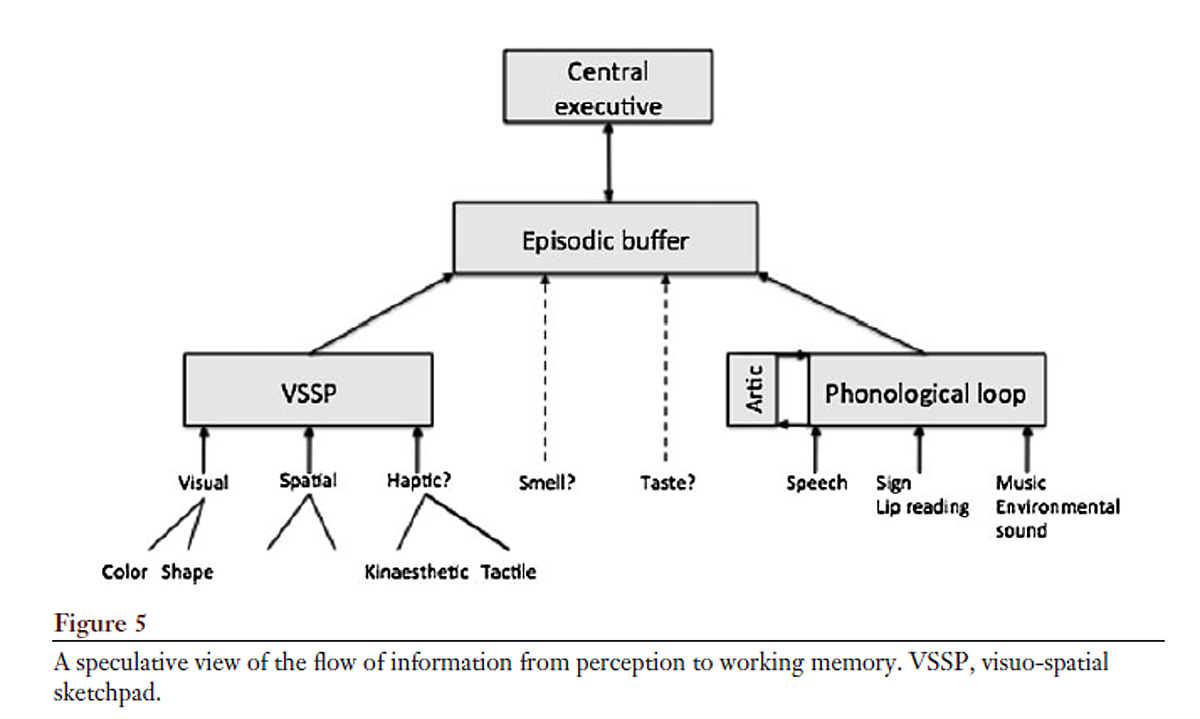

And in his 2012 review Baddeley himself proposes that something more complex might form the basis for future research:

Who knows, a model such as the one Baddeley speculates about above might even provide a theoretical basis for learning styles!

Evidence for a Limited Working Memory

Experimental studies into Working Memory generally take the form of exercises whereby a task such as correcting grammar or doing sums is used to suppress rehearsal. If you are doing something else you can’t be repeating what you need to remember over and over. Subjects are then tested on how many letters, words, numbers, shapes, spacings they can remember at the same time as doing this second task. The number varies a little with the task and with the model the researcher favours, but 4-7 is typical.

Experiments find working memory capacity to be consistent over time with the same subjects, and reasonably consistent when the mode of the test, language rather than maths, for example, is changed.

Scores in these tasks (dubbed Working Memory Capacity, WMC) have been found not only to correlate with g, a measure of “fluid intelligence”, but also to correlate with a range of intellectual skills:

“Performance on WM span tasks correlates with a wide range of higher order cognitive tasks, such as reading and listening comprehension, language comprehension, following oral and spatial directions, vocabulary learning from context, note taking in class, writing, reasoning, hypothesis generation, bridge playing, and complex-task learning.” (in-text references removed for clarity)

The consistency of Working Memory Span Task outcomes and the breadth of skills that they correlate with strongly indicate that something important is being measured. That there is a limit to “working memory” seems hard to question. My reading has not led me to lots of evidence that there are two different and separate registers (Visuo and Phonological), but that may be a consequence of my paper selection being skewed by the firewalls that restrict access to research.

Other Models

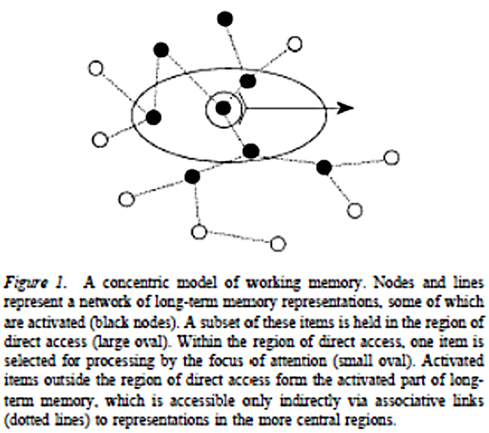

A prolific author of papers on Working Memory and acknowledged by Baddeley, even though he puts a quite different emphasis on the conclusions that can be drawn from the tasks discussed above, is Nelson Cowan. Cowan views the WM Span tests as measuring a subject’s ability to maintain focused attention in the face of distractions. He models the Working Memory as portions of the Long Term Memory that have been activated by that focus upon them, but my reading of a little of his work is that he is less concerned with the mechanisms of memory than with “focus of attention” as a generalised capability:

“A great deal of recent research has converged on the importance of the control of attention in carrying out the standard type of WM task involving separate storage and processing components….. (1) These WM tasks correlate highly with aptitudes even when the domain of the processing task (e.g., arithmetic or spatial manipulation) does not match the domain of the aptitude test (e.g., reading). That is to be expected if the correlations are due to the involvement of processes of attention that cut across content domains. (2) An alternative account of the correlations based entirely on knowledge can be ruled out. Although acquired knowledge is extremely important for both WM tasks and aptitude tasks, correlations between WM tasks and aptitude tasks remain even when the role of knowledge is measured and controlled for. (3) On tasks involving memory retrieval, dividing attention impairs performance in individuals with high WM spans but has little effect on individuals with low WM spans” (in-text references removed for clarity)

Cowan’s model is at the lower end for the number of items that can be stored in the working memory – four, but as Baddeley says “Importantly, however, this is four chunks or episodes, each of which may contain more than a single item“.

The nice thing about Cowan’s model is that it addresses the link to Long Term Memory, which as teachers is the thing that we hope to impress new ideas upon. Feldman Barrett et al, who follow this “control of focus of attention” model for differences in WMC between individuals, state:

“WM is likely related to the ability to incorporate new or inconsistent information into a pre-existing representation of an object”

i.e. WM is likely central to learning.

What Cowan’s model does not include is any suggestion of different registers for different kinds of information. This may, however, be because his work is about focus of attention, not memory structure.

Related to Cowan’s model and also acknowledged by Baddeley in his 2012 review is Oberauer’s work which says:

“1. The activated part of long-term memory can serve, among other things, to memorize information over brief periods for later recall.

2. The region of direct access holds a limited number of chunks available to be used in ongoing cognitive processes.

3. The focus of attention holds at any time the one chunk that is actually selected as the object of the next cognitive operation.”

Which is nicely explained in his figure:

A 2014 paper suggests current thinking is that a better fit for experimental data might be WM as a limited resource that can be spread thinly (with more recall errors) or be more tightly focussed, rather than as a register with slots for somewhere between 4 and 7 things. I suspect that if this turned out to be the case it would not much bother Oberauer because it may be no more than the oval in his diagram being drawn tighter or more widely spread.

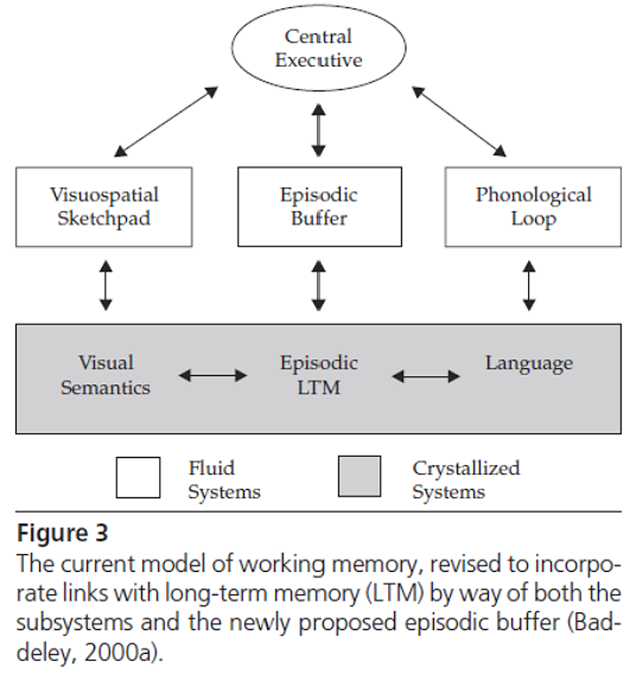

Baddeley’s Revised Model

The experimental evidence for a Working Memory Capacity discussed above caused Baddeley to revise his original model. Again from his 2012 review…

“Such results were gratifying in demonstrating the practical significance of WM, but embarrassing for a model that had no potential for storage other than the limited capacities of the visuo-spatial and phonological subsystems. In response to these and related issues, I decided to add a fourth component, the episodic buffer”

“The characteristics of the new system are indicated by its name; it is episodic in that it is assumed to hold integrated episodes or chunks in a multidimensional code. In doing so, it acts as a buffer store, not only between the components of WM, but also linking WM to perception and LTM. It is able to do this because it can hold multidimensional representations, but like most buffer stores it has a limited capacity. On this point we agree with Cowan in assuming a capacity in the region of four chunks.”

This change brings Baddeley’s model much closer to Cowan’s. Both have an unspecialised, four slot buffer or register, each slot (according to Baddeley, in both models) capable of holding more complex ideas than just a word or number, or as Baddeley puts it “multidimensional representations”. Baddeley regards this as a separate entity to the long term memory, and individual differences presumably follow from differences in the architecture of this entity, where Cowan sees it as an activated portion of the LTM and individual differences stem from differences in the ability to maintain focus on this activated area despite cognitive distractions.

Final Thoughts

The evidence for working memory being restricted is convincing. I have to say that my reading has me less convinced that working memory encompasses “multidimensional representations”, because all the evidence that I’ve seen has been for the retention of words, shapes and numbers.

I am more drawn to Cowan’s and in particular Oberauer’s model and like the idea that the differences these memory span tests show up are differences in focus rather than memory architecture. I don’t think I would be misrepresenting Conway (lead author on a couple of papers referenced here and a co-author with Cowan) in saying that he regards an ability to focus on what is important, in the face of requests to your internal executive for cognitive resources to be diverted elsewhere, as far more likely to correlate with all the intellectual skills that WMC has been shown to correlate with, than having one more space to remember things in.

Cowan’s model and Baddeley’s revised model downplay the structural differences in different forms of short term memory linked to different senses, in favour of a more generalised but limited tool that has direct links to Long Term Memory.

What I’ve Learnt 2 – Research and Learning

In my last post I argued that much of what teachers believe or are asked to believe stems from Theories of Learning that are not constrained by that pesky thing that scientists insist on: “evidence”.

So what evidence is there, and what does it tell us? Unfortunately it tells us distressingly little.

While I was thinking about how to structure this post Matt Perks (@dodiscimus) tweeted a link to a blog from Professor Paul Kirschner (@P_A_Kirschner):

Kirschner is one of the more famous educational psychology researchers amongst tweeting teachers for two reasons. One, because through his blogs and tweets he gets involved, and two, because of the relentless championing of one of his papers by teacher twitteratti bad boy Greg Ashman (@greg_ashman). Anyway this blog is Kirschner’s attempt to put together a list of “know the masters” papers for those starting out in educational psychology research. As @dodiscimus indicated it is not a short list, but what it does illustrate is the diversity of ideas that have informed psychologists’ thinking about learning. Gary Davies has described research into learning as “Pre-paradigmatic”, and while I know that Kirschner wasn’t aiming at a list that described educational psychology as it is today, you certainly don’t get the feeling from his list of masterworks that there is an overarching body of ideas that could be called a paradigm.

With no single paradigm to guide the design of experiments how do you approach finding and using evidence within the field of learning? There seem to be a number of responses to the problem:

Argue that humans are unique and chaotic and that therefore ideas like “falsification” from the Physical Sciences simply don’t apply.

Dylan Wiliam (famous in teacher circles for his association with AfL – which is rarely questioned as anything other than a thoroughly good thing, and because like Kirschner he is willing to interact with teachers) has said at least once of education interventions that “Everything works somewhere and nothing works everywhere“. He went on to say it is the “why” behind this that is interesting, which is of course a reasonable scientific position, but it is the “Nothing Works Everywhere” bit that gets quoted with approval.

When I see “Nothing Works Everywhere” being quoted it tends to lead me into twitter spats, because I regard it as a dismissal of the scientific method and can’t help myself. However, I do think that very many teachers sincerely believe that all pupils are unique and that therefore there cannot be an approach to teaching or learning that is inherently better than another. That doesn’t mean that those teachers cannot regard themselves as “research informed”; it is just that their research and its implementation are peculiar to them with little expectation of general applicability.

Forget Theory and Investigate “What Works”

If you believe science does have something general to say about teaching, and moreover if you believe that it is extremely unlikely that evolution has endowed us all with utterly unalike brain architectures for learning, then you probably believe there are some ideas that work pretty much all of the time. This, of course, is what we want in order to practise as “research informed” teachers – some generally applicable ideas that work. It is just that finding them and demonstrating their general applicability is incredibly hard to do; humans are unique and chaotic etc etc.

How can you do it? Well ideally you do it on a massive scale so that when you say that the small effect size you have identified is significant the numbers mean that it probably really is. Unfortunately to conduct that kind of research requires government backing to tinker with education. I only know of one such project and that is “Project Follow Through”, although governments can provide researchers with quasi-experiments by changing their education systems. For example, we all wait with bated breath to find out what the result of Finland’s new emphasis on “generic competences and work across school” – or as the headlines have it “Scrapping Subject Teaching” – will be. Unfortunately changes that weren’t designed as experiments have a tendency to be controversial and open to interpretation, probably because the results are so politically charged.

Project Follow Through found that highly scripted lessons with a lot of scripted teacher/pupil interaction worked best for the Primary School age kids in the programme and that the other approaches like promoting self-esteem and supporting parents, improved self-esteem, but not academic competence. How many of us are signing up to do our lessons from a script? I’m not. I am really not that committed to being research informed!

Alternatively, you can simulate a large scale experiment by combining the effect sizes of multiple small scale experiments testing the same ideas; meta-analysis. Most famously this was done by John Hattie in his 2008 book Visible Learning who combined meta-analyses within broad teaching ideas like “feedback” into a single effect size for each. “Fantastic” said many of us, including me, when this appeared, now we know – Feedback/good (0.73), Ability grouping/not so good (0.12), Piagetian Programs/great (1.28).
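To make “combining effect sizes” concrete, here is a minimal sketch of one textbook way of doing it: an inverse-variance weighted (fixed-effect) average of standardised mean differences. I am not claiming this is the procedure Hattie used; the study figures below are invented purely for illustration, and the variance formula is the usual large-sample approximation for Cohen’s d.

```python
import math


def d_variance(d: float, n_treat: int, n_ctrl: int) -> float:
    """Approximate sampling variance of Cohen's d (large-sample formula)."""
    n_total = n_treat + n_ctrl
    return n_total / (n_treat * n_ctrl) + d ** 2 / (2 * n_total)


def combine_fixed_effect(studies: list[tuple[float, int, int]]) -> tuple[float, float]:
    """Pool (d, n_treat, n_ctrl) triples into one effect size.

    Each study is weighted by 1 / variance, so large studies count
    for more than small ones. Returns (pooled d, standard error).
    """
    weights = [1.0 / d_variance(d, n1, n2) for d, n1, n2 in studies]
    pooled = sum(w * d for w, (d, _, _) in zip(weights, studies)) / sum(weights)
    standard_error = math.sqrt(1.0 / sum(weights))
    return pooled, standard_error


# Hypothetical studies: one small trial with a big effect,
# one large trial with a small effect.
made_up_studies = [(0.8, 15, 15), (0.2, 300, 300)]
pooled_d, se = combine_fixed_effect(made_up_studies)
print(f"pooled d = {pooled_d:.2f}, standard error = {se:.2f}")
```

With these invented numbers the pooled value sits much closer to the large study’s 0.2 than to the small study’s 0.8, which is the point of weighting; a simple unweighted mean would have reported 0.5.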

Unfortunately, Hattie’s results are hard to use. For example, what feedback is good feedback? We all give feedback, so it’s an effect size of 0.73 compared with never telling the kids how they’re doing? Who does that? Or is there some special feedback, perhaps with multi-coloured pens, that’s really, really good? The EEF found this to be a problem when they ran a pilot scheme in a set of primaries “The estimated impact findings showed no difference between the intervention schools and the other primary schools in Bexley” “Many teachers found it difficult to understand the academic research papers which set out the principles of effective feedback and distinguished between different types of feedback” “Some teachers initially believed that the programme was unnecessary as they already used feedback effectively.”

Or take Piagetian Programs – what are they? Matt Perks did some investigation and found that at least some of the meta-analyses that Hattie included in this category were actually correlational, i.e. kids who scored highly on tests designed to measure Piagetian things like abstract reasoning did well at school. Well duh, but how does that help?

So Hattie’s meta-analysis of meta-analyses is too abstracted from actual teaching to be really useful. Many other meta-analyses are open to contradiction, which leads to the question, are there any that are uncontroversial and tell us something useful? Well yes. The very narrow “How to Study” or Revision field of learning does seem to throw up consistent results, as summarised in the work of John Dunlosky. Forcing yourself to try and recall learning helps you recall it later whether you succeeded or not (low stakes testing), and spacing learning out is better than massed practice. A group of academics calling themselves the “Learning Scientists” do a great job of getting these results out to teachers. However, even they find that just two results don’t make for an interesting and regular blog and so stray into blogging on research that is much less certain.

Finally in “What Works”, if you can’t go massive, and you don’t trust metas, then you need to conduct a randomised and controlled trial (RCT) where the intervention group and the control group have been randomly assigned, but in such a way that both groups have similar starting characteristics. It is RCTs that the EEF have been funding as “Effectiveness Trials” and the results have so far been disappointing. I’m sure that some of my disappointment is because I have been conditioned by Hattie to expect big effect sizes. Obviously most of the effect sizes Hattie included were for post-intervention versus the starting condition, rather than post-intervention versus control, and therefore bound to be bigger. However, even allowing for that, the EEF doesn’t seem to have found much that is any better than normal practice. My biggest disappointment was “Let’s Think Secondary Science” which was a modernisation of CASE (Cognitive Acceleration through Science Education). At the 2016 ASE conference in January, those involved were very upbeat about their results so the assessment in August that said “This evaluation provided no evidence that Let’s Think Secondary Science had an impact on science attainment” was a real surprise. However, it was in line with many other EEF findings which tend to conclude that the teachers thought the intervention was excellent, but the outcomes were less convincing. This is even true for some of the studies that are trumpeted as successes like “Philosophy for Children” where the positives are very qualified, for example; “Results on the Cognitive Abilities Test (CAT) showed mixed results. Pupils who started the programme in Year 5 showed a positive impact, but those who started in Year 4 showed no evidence of benefit.”
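The difference between the two kinds of effect size is easy to see with some arithmetic. The sketch below uses test scores I have made up for illustration (they come from no real trial): both groups start at 50, the control group improves to 56 through ordinary teaching, the intervention group reaches 58, and the standard deviation is assumed to be 10 throughout.

```python
def effect_size(mean_a: float, mean_b: float, sd: float) -> float:
    """Standardised mean difference: (mean_a - mean_b) / sd."""
    return (mean_a - mean_b) / sd


# Invented scores, for illustration only.
PRE_TEST_MEAN = 50.0           # both groups before teaching
POST_CONTROL_MEAN = 56.0       # control group after normal teaching
POST_INTERVENTION_MEAN = 58.0  # intervention group after teaching
ASSUMED_SD = 10.0

# Post-intervention versus the starting condition.
pre_post = effect_size(POST_INTERVENTION_MEAN, PRE_TEST_MEAN, ASSUMED_SD)

# Post-intervention versus control, as in an RCT.
versus_control = effect_size(POST_INTERVENTION_MEAN, POST_CONTROL_MEAN, ASSUMED_SD)

print(f"pre/post effect size:       {pre_post:.1f}")
print(f"versus-control effect size: {versus_control:.1f}")
```

The pre/post figure credits the intervention with everything the pupils would have learnt anyway, so under these assumptions it comes out four times larger than the comparison against control.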

Go back to Theory

As I see it the final option, the one that I see most often being reported, and to my mind the one that ResearchEd promotes, is to do what I said most of us do, in my previous blog, adopt a theory. Of course any principled educator/scientist is going to pick a theory which they believe has solid evidence behind it, but if you pay any attention to science at all you’ll know that Psychology has a massive reproducibility issue. Therefore if you’ve picked on an evidence backed piece of psychology theory to hang your teaching or possibly your research career around, you know there is a risk that that evidence is far from sound. But don’t let that stop you from being dogmatic about it!

What I’ve Learnt – 1 Theories of Learning

I’ve been a Physics teacher for ten years and head of one of the school’s more successful subjects for the majority of that time. Not having done a PGCE means that I’ve had to work a lot out for myself – when I started I didn’t even know what pedagogy meant. These days I’m confident that I’m reasonably well informed, so what have I learned? I’ll start with theories of learning – in a nutshell – I don’t think there is much science behind them, but we all use them.

Before becoming a teacher I’d done a few jobs including post-doc scientist and zoo tour guide. Coming to teaching late I didn’t do the traditional PGCE – supposedly I was on a Graduate Training Course, but the truth is I was just bunged into a classroom and told to get on with it. In my first year I was evenly split between Maths, Chemistry and Physics. I’ve taught others since, but have concentrated on Physics.

The result of all of this was that I was the most reactionary teacher you’ve ever met, my only experience of teaching before I joined a pretty traditional school was my own grammar school twenty years earlier! You know that progressive/traditional debate – well I didn’t know there was any way except the traditional.

Having survived my first experiences of teaching I was struggling to reconcile my experience in the classroom with CPD that continually exhorted me to organise group work and get the kids to discuss and debate the subject. I realised that I’d best learn something about teaching theory – I had been a scientist after all.

So how do you conduct research? You start with a literature review.

Oh my goodness the literature. It isn’t like scientific literature at all; the continual name dropping and referencing within the text, the asides to sociological theory and the failure to ever use a short word when a longer one is available. I couldn’t make my mind up; was this stuff incredibly profound, and I was just too ignorant to understand it, or was it really as thin on ideas as it seemed?

I’m still not sure which it is, but I know from other blogs that I’m not the only one to have reacted this way. See for example Gary Davies, the third section of this blog is titled “Education research papers are too long and badly written”!

There were some ideas, however, so what were they?

- Knowledge is constructed by each individual (Piaget)

- The construction of Knowledge occurs socially (Vygotsky)

- Knowledge is constructed only from the subjective interpretation of an individual’s active experience (von Glasersfeld)

- Knowledge is an individual’s or a society’s and is therefore relative not absolute.

- That the brain develops through stages and that the onset of abstract reasoning is not until the teens. (Piaget)

- That skills are more desirable educational outcomes than knowledge (Bloom)

- That the way to learn skills is to ape the behaviour of experts (in Science Education I blame Nuffield).

These are clearly based on theories about the way that learning operates, and so seem like science. But they’re not. To my mind the process runs the wrong way around. By which I mean that it is the theory that is all important, rather than the process of obtaining evidence for or against the theory, as is the case in the Physical Sciences. In Physics similar accusations of “not being scientific” have been made against String Theorists, but I doubt that there is a String Theorist anywhere who is not aware of the problem – they organise conferences to discuss it – whereas Educationalists seem utterly unaware or unconcerned.

In fact there is only one of the above about which there seems to be any consensus at all about evidence; the fifth. The Edu-twitteratti would have it that the evidence is completely against developmental stages. Which is a shame because it is the only one I liked! Number five seemed to me to have explanatory power for what I was seeing in the classroom. In fact, authors that science teachers still swear by, Driver or Shayer for example, took a position when they were writing in the Eighties where 5 was accepted as truth.

When I looked into why 5 was so completely ruled out, I discovered that research, mostly centred on tracking babies’ eyes, has shown to everyone’s satisfaction that Piaget massively underestimated what babies can do cognitively when defining the earliest of his stages. How this is a killer blow for abstract reasoning being a teenage onset thing I’m not sure, but I guess it is another example of theory being king – reject one small portion of a theory – reject the theory.

I also suspect another reason for developmental stages being out of fashion: Piaget’s implication that children are cognitively limited doesn’t chime with what most educators want to believe, so 5 is gone.

What about the rest? Well it doesn’t take a genius to realise that if theories 1 through 4 dominate educational thought, then students discovering things for themselves (1&3), in groups (2), without a teacher acting as an authority figure declaiming the truth (4) is the ideal model for how learning should operate. No wonder I was continually being exhorted to organise group work. Meanwhile 6 & 7 explained all that emphasis that a science teacher is supposed to put on investigations and on “How Science Works”. Students need to learn “scientific literacy” not the facts of science.

When you think about it, it is quite liberating to do theory driven education. Pick a theory you like, it doesn’t have to be one of the seven above, it can be anything with a touch of logical consistency about it and at least one obscure journal paper as “evidence”. Remodel your teaching to suit, develop materials, convert your fellow teachers and no doubt your passion will mean that your outcomes improve. Before you know it you’re a consultant, write a book, write two, market a system, save the world!

It is also quite hard to teach without a theory, how do you proceed if you have no idea how knowledge can be inculcated? I suspect, consciously or not, that we all have an idea (maybe it is not fleshed out into a full blown theory) about how learning and the development of knowledge occurs, and we teach accordingly.

So I’ve been at this ten years, what is my unscientific theory of learning?

It is sort of Piaget and runs…

- We carry within us a model of how we think the world works, any new knowledge has to be consistent with that model.

- The most likely fate for knowledge that is inconsistent with the existing model is its rejection, perhaps with lip service being paid to pacify the teacher. Only very rarely will the model be adapted to match the new knowledge.

- Knowledge is individually constructed upon the existing model, but the “experience” of the new knowledge can be reading, watching or hearing, it need not be physically experienced or discovered.

- Oh and I’m a scientist so there is such a thing as objective truth, most knowledge is not relative.

Am I prepared to defend this theory – yes. Do I secretly still think that abstract reasoning is a teenage phenomenon – yeah secretly. Do I believe my theory is true – I hope it contains grains of truth, but as a good scientist I have to admit it might be complete nonsense.

Am I somewhat ashamed to be found to be holding views unsupported by science – yes, but if I’ve learnt anything from reading Piaget it is that kids will defend utterly ridiculous theories of how the world works and will fight to maintain the logical consistency of those theories while flying in the face of facts – as adults I imagine we are just better at disguising the process.