Well actually I don’t, I say Schemas. I honestly didn’t realise, until I started researching them, that schemata was the plural of schema!

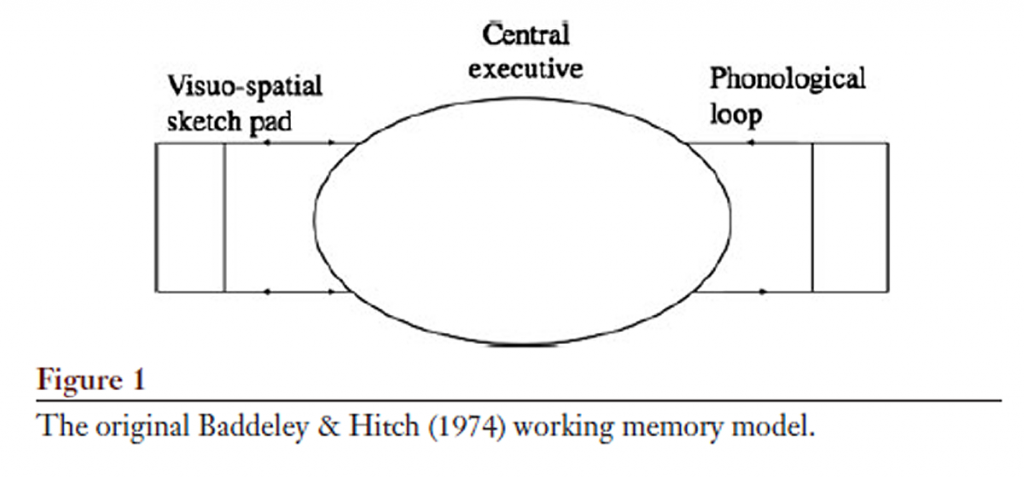

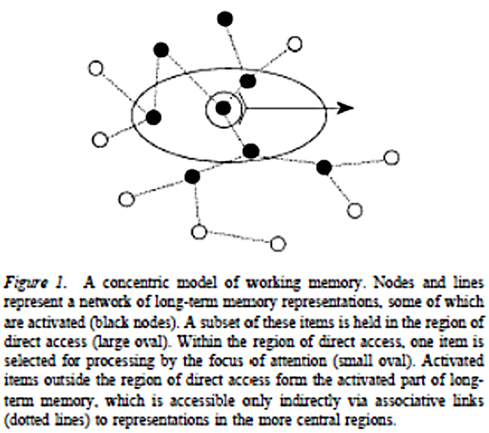

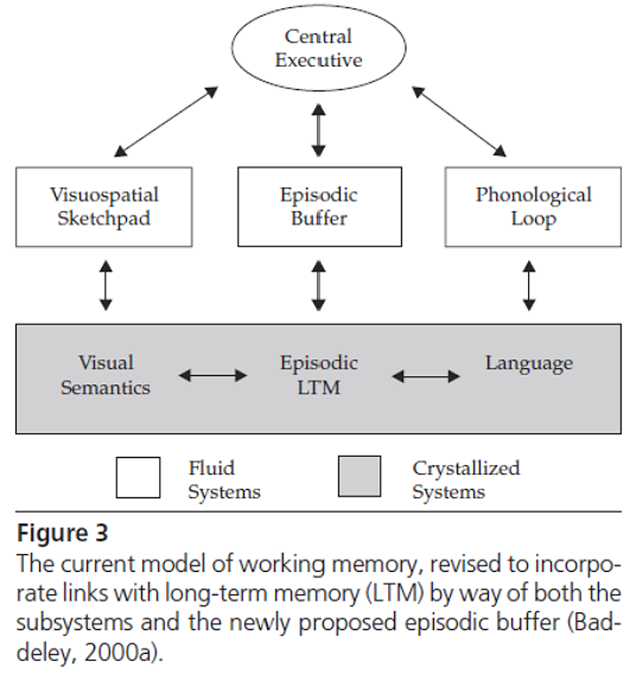

Anyway, why are they significant? Well, in my post about Working Memory I said Baddeley asserted that amongst the limited number of items being held in Working Memory, and therefore available for manipulation and change, could be complex, interrelated ideas: schemas.

Allowing more complex memory constructs to be accessed is particularly important for Cognitive Load Theory, because if Working Memory is really limited to just four simple things, numbers or letters, then Working Memory cannot be central to the learning process in a lesson. What is going on in a lesson is a much richer thing than could be captured in four numbers. The escape clause for this is to allow the items stored in, or accessed by, Working Memory to be schemas or schemata.

A schema, then, is a “data structure for representing generic concepts stored in memory”, and schemas “represent knowledge at all levels of abstraction” (Rumelhart, Schemata: The Building Blocks of Cognition, 1978).

How We Use Schemas

To give an example of what a schema is and how it is used, we can take one from Anderson (when researching this I was pointed at Anderson by Sue Gerrard), who developed the schema idea in the context of using pre-existing schemas to decode text.

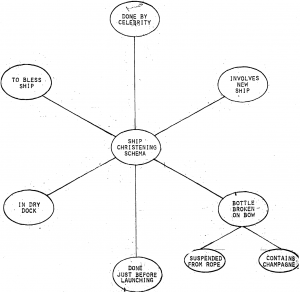

In 1984 Anderson and Pearson gave an example containing British ship building and industrial action, something only those of us from that vintage are likely to appreciate. The key elements that Anderson and Pearson think someone familiar with christening a ship would have built up into a schema are shown in the figure:

They then discuss how that schema would be used to decode a newspaper article:

‘Suppose you read in the newspaper that,

“Queen Elizabeth participated in a long-delayed ceremony in Clydebank, Scotland yesterday. While there is bitterness here following the protracted strike, on this occasion a crowd of shipyard workers numbering in the hundreds joined dignitaries in cheering as the HMS Pinafore slipped into the water.”

It is the generally good fit of most of this information with the SHIP CHRISTENING schema that provides the confidence that (part of) the message has been comprehended. In particular, Queen Elizabeth fits the <celebrity> slot, the fact that Clydebank is a well known ship building port and that shipyard workers are involved is consistent with the <dry dock> slot, the HMS Pinafore is obviously a ship, and the information that it “slipped into the water” is consistent with the <just before launching> slot. Therefore, the ceremony mentioned is probably a ship christening. No mention is made of a bottle of champagne being broken on the ship’s bow, but this “default” inference is easily made.’

They expand on this by discussing how more ambiguous pieces of text, with less clear-cut fits to the “SHIP CHRISTENING” schema, might be interpreted.

Returning to Rumelhart, we find the same view of schemas as active recognition devices “whose processing is aimed at the evaluation of their goodness of fit to the data being processed.”
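Since Rumelhart calls a schema a “data structure”, it may help to see the ship christening example written as one. What follows is only my own toy sketch, not anything from Anderson and Pearson or Rumelhart: the `Slot` and `Schema` classes, the cue words, and the idea of scoring fit as the fraction of slots filled are all assumptions made for illustration. The slot names and the “default” champagne inference are taken from the quoted passage.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class Slot:
    name: str
    cues: Set[str]                 # hypothetical cue phrases that would fill the slot
    default: Optional[str] = None  # what we infer when the text says nothing


@dataclass
class Schema:
    name: str
    slots: List[Slot]

    def fit(self, text: str) -> Tuple[float, Dict[str, List[str]], Dict[str, str]]:
        """Return (goodness of fit, filled slots, default inferences)."""
        lowered = text.lower()
        filled: Dict[str, List[str]] = {}
        defaults: Dict[str, str] = {}
        for slot in self.slots:
            hits = sorted(cue for cue in slot.cues if cue in lowered)
            if hits:
                filled[slot.name] = hits
            elif slot.default is not None:
                defaults[slot.name] = slot.default
        # Crude fit measure (an assumption): fraction of slots the text fills.
        score = len(filled) / len(self.slots)
        return score, filled, defaults


SHIP_CHRISTENING = Schema(
    name="SHIP CHRISTENING",
    slots=[
        Slot("celebrity", {"queen", "president"}),
        Slot("dry dock", {"clydebank", "shipyard"}),
        Slot("ship", {"hms"}),
        Slot("just before launching", {"slipped into the water"}),
        Slot("bottle broken on bow", {"champagne", "bottle"},
             default="bottle of champagne broken on the bow"),
    ],
)

ARTICLE = (
    "Queen Elizabeth participated in a long-delayed ceremony in Clydebank, "
    "Scotland yesterday. While there is bitterness here following the "
    "protracted strike, on this occasion a crowd of shipyard workers numbering "
    "in the hundreds joined dignitaries in cheering as the HMS Pinafore "
    "slipped into the water."
)

score, filled, defaults = SHIP_CHRISTENING.fit(ARTICLE)
print(score)     # four of the five slots are filled by the article
print(defaults)  # the champagne bottle is supplied as a default inference
```

Real comprehension is obviously doing something far subtler than substring matching, but the shape is the same: slots, evidence that fills them, an overall judgement of fit, and defaults for whatever the text leaves out.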

Developing Schemas

In his review Rumelhart states that:

‘From a logical point of view there are three basically different modes of learning that are possible in a schema-based system.

- Having understood some text or perceived some event, we can retrieve stored information about the text or event. Such learning corresponds roughly to “fact learning”. Rumelhart and Norman have called this learning accretion.

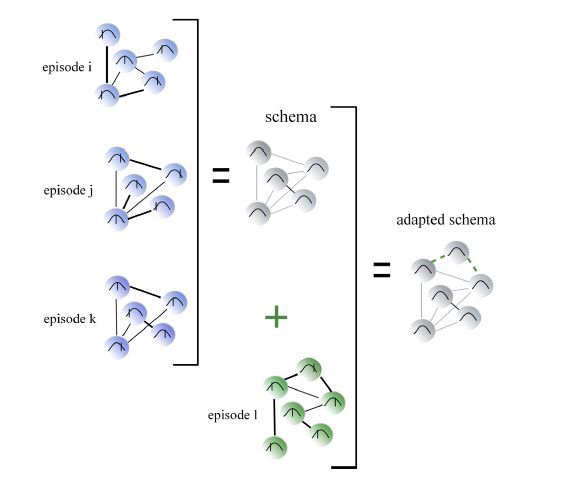

- Existing schemata may evolve or change to make them more in tune with experience…. this corresponds to elaboration or refinement of concepts. Rumelhart and Norman have called this sort of learning tuning.

- Creation of new schemata…. There are, in a schema theory, at least two ways in which new concepts can be generated: they can be patterned on existing schemata, or they can in principle be induced from experience. Rumelhart and Norman call learning of new schemata restructuring.’

Rumelhart expands on accretion:

‘thus, as we experience the world we store, as a natural side effect of comprehension, traces that can serve as the basis of future recall. Later, during retrieval, we can use this information to reconstruct an interpretation of the original experience – thereby remembering the experience.’

And tuning:

‘This sort of schema modification amounts to concept generalisation – making a schema more generally applicable.’

He is not keen on “restructuring”, however, because he regards a mechanism to recognise that a new schema is necessary as something beyond schema theory, and regards the induction of a new schema as likely to be a rare event.
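To keep the three modes straight in my own head, I find it useful to write them as three different operations on a store of schemas. This is a deliberately naive sketch under my own assumptions: the `SchemaMemory` class, its method names, and the “prime minister” and “BRIDGE OPENING” examples are all hypothetical, and restructuring is shown only in its “patterned on an existing schema” form, since Rumelhart doubts the induction route.

```python
import copy
from typing import Dict, List, Set, Tuple


class SchemaMemory:
    """Toy store: each schema maps slot names to the values it accepts."""

    def __init__(self) -> None:
        self.schemas: Dict[str, Dict[str, Set[str]]] = {}
        self.traces: List[Tuple[str, Dict[str, str]]] = []

    def accrete(self, schema_name: str, episode: Dict[str, str]) -> None:
        # Accretion: store a trace of the episode; the schema is unchanged.
        self.traces.append((schema_name, dict(episode)))

    def tune(self, schema_name: str, episode: Dict[str, str]) -> None:
        # Tuning: widen existing slots so the schema applies more generally.
        schema = self.schemas[schema_name]
        for slot, value in episode.items():
            if slot in schema:
                schema[slot].add(value)

    def restructure(self, new_name: str, pattern_on: str) -> None:
        # Restructuring: create a new schema patterned on an existing one.
        self.schemas[new_name] = copy.deepcopy(self.schemas[pattern_on])


memory = SchemaMemory()
memory.schemas["SHIP CHRISTENING"] = {
    "celebrity": {"queen"},
    "place": {"dry dock"},
}

# Hypothetical new episode: a different celebrity launches a ship.
episode = {"celebrity": "prime minister", "place": "dry dock"}
memory.accrete("SHIP CHRISTENING", episode)
memory.tune("SHIP CHRISTENING", episode)
memory.restructure("BRIDGE OPENING", pattern_on="SHIP CHRISTENING")

print(sorted(memory.schemas["SHIP CHRISTENING"]["celebrity"]))
print(sorted(memory.schemas))
```

Notice that accretion and tuning both assume the right schema has already been chosen. Nothing in the sketch decides when a brand new schema is needed, which is exactly the gap Rumelhart points to.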

A nice illustration (below) of Rumelhart’s tuning – schemas adapting and becoming more general as overlapping episodes are encountered – comes from Ghosh and Gilboa’s paper which was shared by J-P Riodan. Ghosh and Gilboa’s work looks at how the psychological concept of schema as developed in the 70s and 80s might map onto modern neuroimaging studies, as well as giving a succinct historical overview.

If accretion, tuning and restructuring are starting to sound a bit Piagetian to you (“assimilation and accommodation” for “tuning and restructuring”), then you would be right. Smith’s Research Starter on Schema Theory (I’ve accessed Research Starters through my College of Teaching Membership) says that Piaget coined the term schema in 1926!

It would be a lovely irony if the idea that allowed Cognitive Load Theory to flourish were attributable to the father of constructivists. However, Derry (see below) states that there is little overlap in the citations of Cognitive Schema theorists, like Anderson and later Sweller, and the Piaget school of schema thought, so Sweller probably owes Piaget for little except the name.

Types of Schema

In her 1996 review of the topic, Derry finds three kinds of schema in the literature:

- Memory Objects. The simplest memory objects are named p-prims by diSessa (and have been the subject of discussion amongst science teachers; see, for example, E=mc2andallthat). However, above p-prims are “more integrated memory objects hypothesized by Kintsch and Greeno, Marshall and Sweller and Cooper, and others, these objects are schemas that permit people to recognise and classify patterns in the external world so they can respond with the appropriate mental or physical actions.” (Unfortunately I can’t find any access to the Sweller and Cooper piece.)

- Mental Models. “Mental model construction involves mapping active memory objects onto components of the real-world phenomenon, then reorganising and connecting those objects so that together they form a model of the whole situation. This reorganizing and connecting process is a form of problem solving. Once constructed, a mental model may be used as a basis for further reasoning and problem solving. If two or more people are required to communicate about a situation, they must each construct a similar mental model of it.” (Derry connects mental models to constructivist teaching and again references DiSessa – “Toward an Epistemology of Physics”, 1993)

- Cognitive Fields. “…. a distributed pattern of memory activation that occurs in response to a particular event.” “The cognitive field activated in a learning situation .. determines which previously existing memory objects and object systems can be modified or updated by an instructional experience.”

Now it is not clear to me that these three are different things; they seem to represent only differences in emphasis or abstraction. CLT would surely be interested in Cognitive Fields, but Derry associates Sweller with Memory Objects, while Mental Models seem to be as much about the tuning of the schema as the schema itself.

DiSessa does, however, have a different point of view when it comes to science teaching. Where most science teachers would probably see misconceptions as problems built-in at the higher schema level, DiSessa sees us as possessing a stable number of p-prims whose organisation is at fault, and that by building on the p-prims themselves we can alter their organisation.

Evidence for Schemas

There is some evidence for the existence of schemas. Because interpretation of text is where schema theory really developed, there are experiments which test interpretation or recall of stories or text in a schema context. For example, Bransford and Johnson (1972) conducted experiments that suggest that pre-triggering the correct Cognitive Field before listening to a passage can aid its recall and understanding.

Others have attempted to show that we arrive at answers to problems not via logic but by triggering a schema. One approach to this is via Wason’s Selection Task, which only 10% of people answer correctly (I get it wrong every time). For example, Cheng et al found that providing a context that made sense to people led them to the correct answer, and argue that this is because the test subjects are thus able to invoke a related schema. They term the higher-level schemas used for reasoning, but still rooted in their context, “pragmatic reasoning schemas”.
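For anyone who has not met the task, the logic is easy to state even if it is hard to do in your head. In the classic abstract version (my choice of illustration here, not necessarily the materials Cheng et al used), four cards show A, K, 4 and 7, each has a letter on one side and a number on the other, and the rule is “if a card has a vowel on one side, it has an even number on the other”. The only cards worth turning are those that could falsify the rule: the ones showing P (a vowel) or not-Q (an odd number). The function and variable names below are just my own.

```python
from typing import Callable, List


def cards_to_turn(
    visible_faces: List[str],
    shows_p: Callable[[str], bool],
    shows_not_q: Callable[[str], bool],
) -> List[str]:
    """Cards that could falsify 'if P then Q': those showing P or not-Q."""
    return [face for face in visible_faces if shows_p(face) or shows_not_q(face)]


def is_vowel(face: str) -> bool:
    return face in "AEIOU"


def is_odd_number(face: str) -> bool:
    return face.isdigit() and int(face) % 2 == 1


faces = ["A", "K", "4", "7"]
to_turn = cards_to_turn(faces, is_vowel, is_odd_number)
print(to_turn)  # ['A', '7']
```

The tempting wrong answer is A and 4, but the 4 cannot break the rule whatever is on its back. The claim from Cheng et al is that a familiar context lets people reach the right answer by invoking a schema rather than by working through this logic.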

I am not sure that any of the evidence is robust, but schemas/schemata continue to be accepted because the idea that knowledge is organised and linked at more and more abstract levels offers a lot of explanatory power in thinking about what is happening in one’s own head, and what is happening in learning more generally.

Schema theory also fits with the current reaction against the promotion of the teaching of generic skills. If we can only think successfully about something if we have a schema for it, and schemas start off, at least, as an organisation of memory objects, then thought is ultimately rooted in those memory objects: thought is context specific. I suspect that this may be what Cheng et al’s work is confirming for us.