I’ve already written about “Working Memory”, which is so central to Cognitive Load Theory. However, the key question I felt CLT left unanswered is how our memory of a lesson, as an event, relates to, and becomes abstracted into, a change in the putative schema associated with that lesson. I’d love to say that I now have an answer, but I do feel that I’m a bit closer. I was also taken in a different direction by the work of Professors Gais and Born of Tübingen University, who work on sleep and memory (related article).

Memory Types

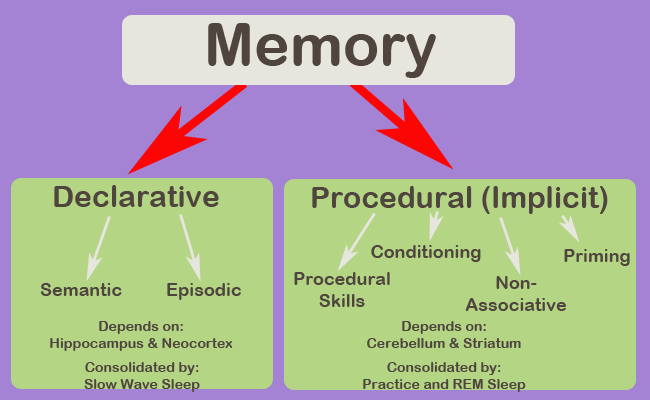

A starting point was that I was confused by terms like Procedural Memory and Declarative Memory, and I didn’t really know that Semantic Memory and Episodic Memory were things I should be thinking about until I read Peter Ford’s blog.

Endel Tulving, who is widely credited with introducing the term “Semantic Memory”, commented, in 1972, that in one single collection of essays there were twenty-five categories of memory listed. No wonder I was confused.

Today the main, usually accepted, split is between Declarative (or Explicit) and Procedural (or Implicit) Memories. Declarative Memory can be brought to mind (an event or a definition, for example), whereas Procedural Memory is unconscious (riding a bike, for example).

Procedural Memory

The division between the two was starkly shown in the 1950s, when an operation on a patient’s brain to relieve epilepsy led to severe anterograde amnesia (inability to form new memories). That patient (referred to in the literature as H.M.) could, through practice, improve procedural skills (such as mirror drawing), even though he did not remember his previous practice (Acquisition of Motor Skill after Medial Temporal-Lobe Excision, and discussed in Recent and Remote Memories).

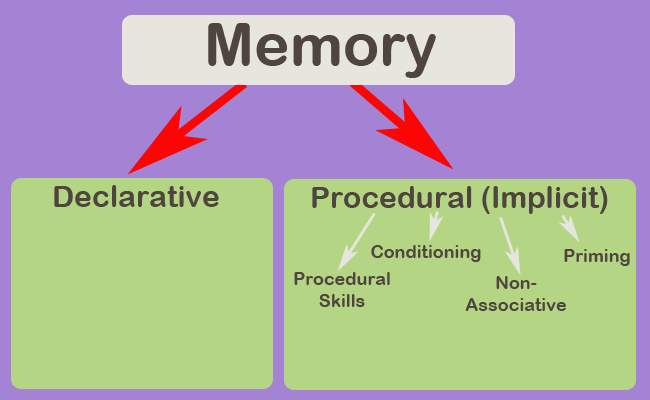

Similar studies have identified several processes which obviously involve memory, but which are unaffected by deficits in the Declarative Memory System of amnesiacs and, sometimes, drunks.

- Procedural Skills (see Squire and Zola below)

- Conditioning (as in Pavlov’s Dogs)

- Non-associative learning (habituation, i.e. getting used to and so ignoring stimuli; and sensitisation, i.e. learning to react more strongly to novel stimuli)

- Priming (using one stimulus to alter the response to another)

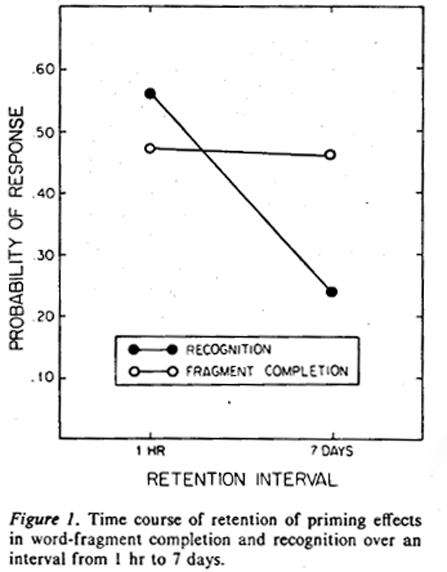

Priming was demonstrated by Tulving and co-workers in an experiment which I thought was interesting in an education context. They asked people to study a list of reasonably uncommon words without telling them why. An hour later the participants were tested in two ways: they were shown a new list and asked which words they recognised from the study list, and they were asked to complete words with missing letters (like the Head to Head round in Pointless!). Unsurprisingly, words which had been on the study list were completed more successfully than new words (0.46 completion vs 0.31). More interestingly, this advantage in word-fragment completion from having been primed by the study list was still present in new tests 7 days later. Performance on the recognition test, on the other hand, declined significantly over those seven days (see their Figure 1 below).

Tulving was using experiments designed by Warrington and Weiskrantz (discussed in “Implicit Memory: History and Current Status”), who found that amnesiacs did as well as their control sample in fragment completion, having been primed by a previous list which they did not remember seeing.

Tulving took the independence of performance on the word recognition tests and the fragment completion tests as good evidence that there were two different memory systems at play.

Squire and Zola give examples of learnt procedural skill tests which clearly require memory of some type. For example:

In this task, subjects respond as rapidly as possible with a key press to a cue, which can appear in any one of four locations. The location of the cue follows a repeating sequence of 10 cued locations for 400 training trials. … amnesic patients and control subjects exhibited equivalent learning of the repeating sequence, as demonstrated by gradually improving reaction times and by an increase in reaction times when the repeating sequence was replaced by a random sequence. Reaction times did not improve when subjects were given a random sequence.

Structure and Function of Declarative and Nondeclarative Memory Systems

They also cite predicting the weather from abstract cue cards, deciding whether subsequent letter sequences follow the same set of rules as a sample set, and identifying which dot patterns are generated from the same prototype as procedural skills where amnesiacs perform as well as other people despite not remembering their training sessions.

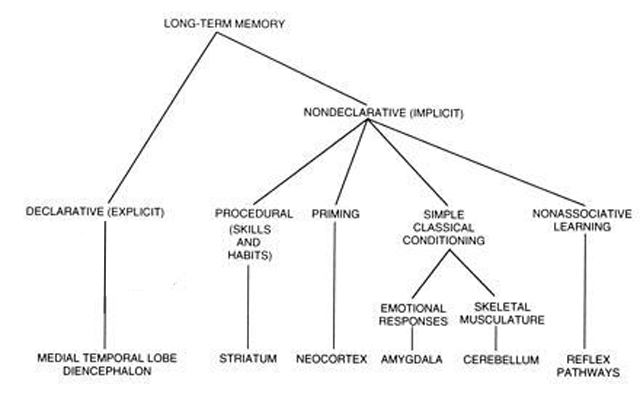

In fact, such is the range of memory types identified that Squire chooses to see Procedural Memory as just one portion of the whole group, and to refer to the whole group as “Non-Declarative”.

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC33639/

Summary for Procedural (Non-Declarative or Implicit) Memory

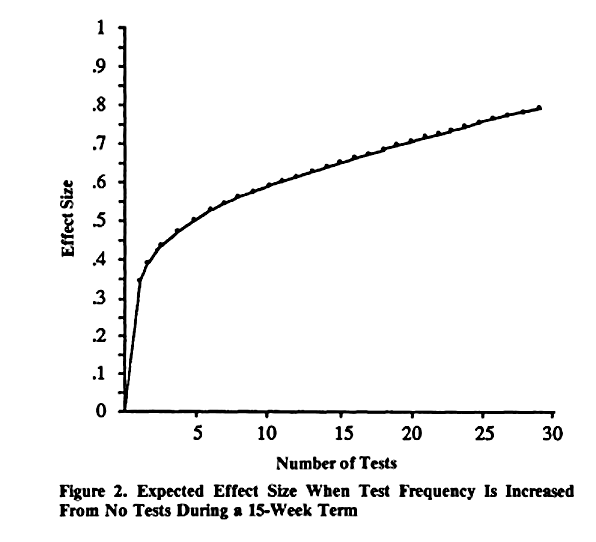

In summary, Procedural or Implicit Memory, the memory element of unconscious skills or reactions, is limited, but it is more than just motor skills or muscle memory. There are priming and pattern recognition elements which could be exploited in an education context. In fact, I do wonder whether the testing effect (retrieval practice) might be a form of priming, and therefore non-declarative.

Declarative Memory

As mentioned earlier, Tulving is credited with introducing the idea of Semantic Memory. He envisaged three memory types: Semantic Memory (what we teachers mean by knowledge), dependent on Procedural Memory; and the final type, Episodic Memory (memory of events), dependent on Semantic Memory. Episodic Memory and Semantic Memory are now regarded as interrelated systems of Declarative Memory.

In her 2003 review, Sharon Thompson-Schill neatly illustrated the difference between the two types of declarative memory:

The information that one ate eggs and toast for breakfast is an example of episodic memory, whereas knowledge that eggs, toast, cereal, and pancakes are typical breakfast foods is an example of semantic memory.

Neuroimaging Studies of Semantic Memory, Sharon L. Thompson-Schill

Episodic Memory

That Episodic Memory depends on the hippocampus and the surrounding Medial Temporal Lobe has been recognised since their excision in patient H.M., and modern brain imaging studies, such as those reviewed by Thompson-Schill, confirm this. As well as having specific cells dedicated to encoding space and time, the hippocampus seems to integrate the inputs from widely spread sensory and motor processing areas of the brain, recognising similarity to and difference from previous memory traces and encoding them accordingly. The memory traces that make up the episode, however, remain widely distributed in the relevant sensorimotor areas.

Over time, changes in the encoding of the memory reduce its dependence on the hippocampus. The memory of an episode ends up distributed across the neocortex (the wrinkled surface of the brain) without a hippocampal element, which is why H.M. retained his earlier memories. This change is called systems consolidation (see consolidation).

Semantic Memory

The structures underlying Semantic Memory, and even its very existence, are less clear-cut. The possibilities (see review by Yee et al) are:

- That Semantic Memories are formed in a separate process from Episodic Memory (for example, directly from Working Memory to Long Term Memory via the Prefrontal Cortex). Tulving took this view, but the evidence from amnesiacs is that forming new Semantic Memory without Episodic Memory is, for them, a slow and limited process.

- That the brain abstracts information from Episodic Memory, and sorts and stores it by knowledge domain. There is some evidence supporting this; brain damage in specific areas can damage memory of specific categories of object (e.g. living vs non-living).

- That the process of abstraction is specific to the sense or motor skill involved, possibly with another brain area (the Anterior Temporal Lobe, ATL) functioning as an over-arching hub which links concepts across several sensorimotor abstractions (see Patterson et al for a review of the ATL as a hub). This seems to be the most widely accepted theory because it best matches the brain imaging data, which show semantic memory operations activating both general areas like the ATL and the relevant sensorimotor brain areas, with the exact area of activation shifting depending on the level of abstraction.

- That there is no such thing as Semantic Memory and that what seems like knowledge of a concept is in fact an artifact arising from the combined recall of previous episodic encounters with the concept. Such models of memory are called Retrieval Models…

…retrieval models place abstraction at retrieval. In addition, the abstraction is not a purposeful mechanism per se. Instead, abstraction incidentally occurs because our memory retrieval mechanism is reconstructive. Hence, semantic memory in retrieval-based models is essentially an accident due to our imperfect memory retrieval process.

“Semantic Memory”, Eiling Yee, Michael N. Jones, Ken McRae

Both of the latter two possibilities have knowledge rooted in sensorimotor experience (embodied). How are purely abstract concepts treated in an embodied system? The evidence shows that abstract concepts with an emotional content, e.g. love, are embodied in those areas which process emotion, but those with less emotional content, e.g. justice, are dealt with more strongly in those regions of the brain known to support language:

It has therefore been suggested that abstract concepts for which emotional and/or sensory and motor attributes are lacking are more dependent on linguistic, and contextual/situational information. That is, their mention in different contexts (i.e., episodes) may gradually lead us to an understanding of their meaning in the absence of sensorimotor content. Neural investigations have supported at least the linguistic portion of this proposal. Brain regions known to support language show greater involvement during the processing of abstract relative to concrete concepts.

“Semantic Memory”, Eiling Yee, Michael N. Jones, Ken McRae

Consolidation and Sleep

Memory consolidation is thought to involve three processes operating on different time scales:

- Strengthening and stabilisation of the initial memory trace (synaptic consolidation – minutes to hours)

- Shifting much of the encoding of the memory to the neocortex (systems consolidation – days to weeks)

- Strengthening and changing a memory by recalling it (reconsolidation – weeks to years)

Clearly all three of these are vital to learning, but the neuroscientific discoveries, and the terms neuroscientists use for the processes involved, have not yet made much of an impact on educational learning theory.

Synaptic Consolidation

Much of the information encoded in a memory is thought to reside in the strengths of the synaptic interconnections in an ensemble of neurons. The immediate encoding seems to be via temporary (a few hours) chemical alterations to synapse strength. Conversion to longer-term storage begins within a few minutes with the initiation of a process called Long Term Potentiation (LTP), in which new proteins are manufactured (gene expression) that make long-lasting alterations to synapse strength.

Tononi and Cirelli have hypothesised that sleep is necessary to reset and rebuild the resources the brain needs to keep reconstructing its linkages in this way (i.e. to maintain plasticity).

Systems Consolidation

Although the hippocampus undergoes Long Term Potentiation, it is thought to be only a temporary store. Diekelmann and Born refer to it as the Fast Store, which, through repeated re-activation of memories, trains the Slow Store within the neocortex. This shift away from relying on the hippocampus for the integration of memory is Systems Consolidation.

It is the contention of Jan Born’s research group that this Systems Consolidation largely occurs during sleep. They argue further that while Procedural Memory benefits from rehearsal and re-enactment in REM sleep (which we usually fall into later in the night), it is Slow Wave Sleep (SWS), which we fall into first, that eliminates weak connections, cleaning up the new declarative memories, and…

…the newly acquired memory traces are repeatedly re-activated and thereby become gradually redistributed such that connections within the neocortex are strengthened, forming more persistent memory representations. Re-activation of the new representations gradually adapt them to pre-existing neocortical ‘knowledge networks’, thereby promoting the extraction of invariant repeating features and qualitative changes in the memory representations.

“The memory function of sleep” Susanne Diekelmann and Jan Born

Born is pretty bullish about the importance of sleep. In an interview with Die Zeit he said:

Memory formation is an active process. First of all, what is taken during the day is stored in a temporary memory, for example the hippocampus. During sleep, the information is reactivated and this reactivation then stimulates the transfer of the information into the long-term memory. For example, the neocortex acts as long-term storage. But: Not everything is transferred to the long-term storage. Otherwise the brain would probably burst. We use sleep to selectively transfer certain information from temporary to long-term storage.

(Translation by Google).

“Without sleep, our brains would probably burst” Zeit Online

Reconsolidation

The idea that memories can change is not new, but the discovery in animals that revisiting memories destabilises them, making them vulnerable to change or loss, has opened up new possibilities, such as the removal or modification of traumatic memories.

Nader et al discovered that applying drugs which suppress protein manufacture to the brain area supporting a revisited memory left that memory vulnerable to disruption. This suggests that protein production like that seen in Long Term Potentiation is necessary to reconsolidate a memory (make it permanent again) once it has been recalled; until then, it can be changed.

The fact that previously locked-down memories become vulnerable to change (labile) when they are re-accessed might, if the process applies to semantic memory, be the pathway for altering or improving memories which are revisited during revision, or might allow stubborn misconceptions to be rewired.

Conclusions / Implications for Teaching Theory

1 It is clear from the above that memory formation is not the very simplified “if it is in Working Memory it ends up in Long Term Memory” process that tends to be assumed as an adjunct to Cognitive Load Theory. However, nothing above rules out a direct link between a Baddeley-type WM model and Long Term Memory formation. Many of the papers I looked at stressed the importance in memory formation of the Pre-Frontal Cortex (PFC), which is usually taken to be the site of Executive Control and possibly of Working Memory. Blumenfeld and Ranganath, for example, suggest that the ventrolateral region of the PFC plays a role in selecting where attention should be directed, and in doing so increases the likelihood of successful recall.

2 I think it is clear that the work above provides no evidence for schema formation. However, there are researchers who believe that their investigations using brain scans and altering brain chemistry do show schema formation (the example given, and others, were cited by Ghosh and Gilboa). I have not included these studies because I was not convinced I understood them, they did not appear in the later reviews I was reading, and they do not have huge numbers of citations according to Google Scholar.

3 What I do think the above provides is a clear answer to the “do skills stand alone from knowledge” debate: a qualified no.

Procedural or Implicit Memory does not depend on declarative memory (knowledge), and so those skills which fall within Procedural Memory, like muscle memory (Squire’s Skeletal Musculature Conditioning), are stand-alone skills.

These are the memories/skills which benefit most strongly from sleep; in fact, Kuriyama et al found that the more complex the skill, the more sleep helped embed it. This might be a useful finding in some areas of education, e.g. introducing a new manipulative or sporting skill but only using it again a day or two later, once sleep has had an effect.

However, Procedural Memory as discussed by a neuroscientist is not the “memory of how to carry out a mathematical procedure” that a maths teacher might mean by procedural memory. The maths teacher’s meaning is purely declarative. Similarly, the skill of reading a book rests upon declarative memory of word associations (amnesiacs with declarative memory impairment can only make new word associations very slowly).

4 I was surprised that the “immediate memory, to fast memory, to slow memory” process that molecular and imaging studies of the brain have elucidated does not match the model of Short Term Memory to Long Term Memory which I thought I knew from psychology. But I am not yet certain what difference that makes to my teaching, other than to think that we do not take sleep into account anything like enough when scheduling learning. Should we be moving on to new concepts, or even using existing concepts, before we have allowed sleep to act upon them? Do we risk concepts being overwritten in the hippocampus by moving along too quickly?

5 Finally, I found Reconsolidation interesting. It might not make a big difference to the way we teach, but it might explain good practice, like getting students to recall their misconception before attempting to address it. Reconsolidation could be a fertile area of investigation; I am sure there must be lots of uses for it which I have not thought of.

That’s it. You have made it to the end, well done. Sorry that what started out as a small research project for myself turned into something larger. I had not realised quite how big a subject memory was; I’m not sure I’ve even scratched the surface.

If you want to read more about the nature of memory, consolidation and particularly Long Term Potentiation, I recently found this 2019 review:

Is plasticity of synapses the mechanism of long-term memory storage?